Protein-based biosensors: application in detecting influenza

Donal MacKernan, University College Dublin & E-CAM

One of E-CAM's transverse actions is the development of a protein-based sensor (patent pending, filed by UCD [1,2]) with applications in medical diagnostics, scientific visualisation and therapeutics. At the heart of the sensor is a novel protein-based molecular switch that allows extremely sensitive real-time measurement of molecular targets, and that can turn protein functions and other processes on or off accordingly (see Figure 1). For a description of the sensor, see this piece.

One application of the protein-based sensor is the detection of influenza, by modifying the sensor to measure upregulated epidermal growth factor receptor (EGFR) in living cells. The appeal of using it for flu is that it is cheap, easy for non-specialists to use in the field, and accurate, that is, with very low rates of false negatives and false positives compared to existing field tests. UCD’s patent-pending sensors have these attributes built into their ‘all-n-one’ design through a novel type of molecular switch, which performed well in the laboratory proof-of-concept phase. A funded research project to continue this development at UCD is almost certain and is likely to start within weeks.

The answer to the currently frequently asked question, “Can we modify this sensor to quickly detect COVID-19?”, is yes, provided we know the amino acid sequences of antibody-epitope pairs specific to this coronavirus.

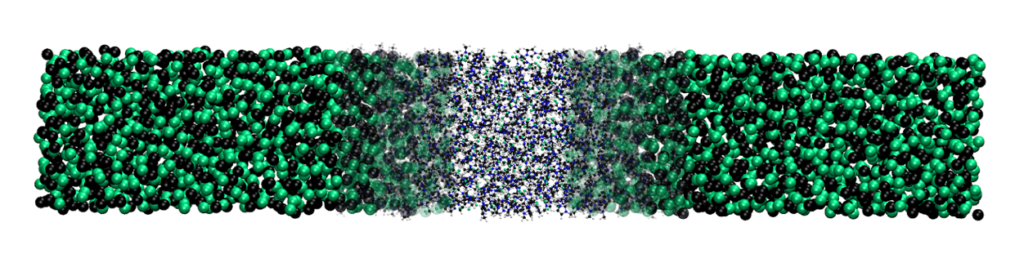

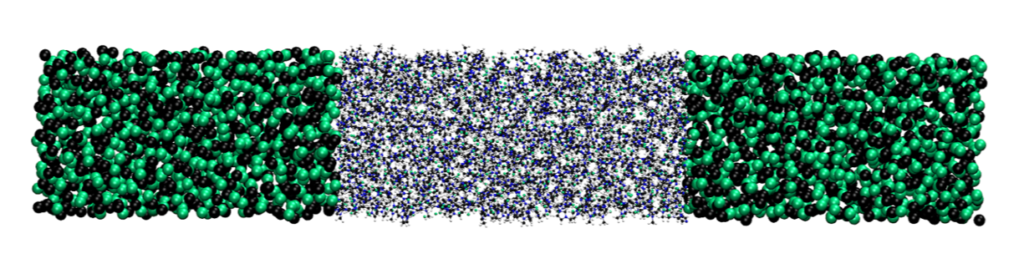

Figure 1. Schematic illustration of the widely used sensor of Komatsu et al. [3] on the left and the “all-n-one” UCD sensor on the right, in the “OFF” and “ON” states corresponding to the absence and presence of the target biomarker respectively. The “all-n-one” design replaces the flexible Komatsu linker with a hinge protein carrying charged residues q1, q2, ..., which are placed symmetrically on either side of the centre so that, in the absence of the target, Coulomb repulsion forces the hinge open. Their location and number can be adjusted to suit each application. The spheres B and B’ denote the sensing modules, which tend to bind to each other when a target biomarker or analyte is present. The spheres A and A’ denote the reporting modules, which emit a recognisable (typically optical) signal when they are close to or in contact with each other, i.e. in the presence of a target biomarker or analyte.
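To make the role of the charged residues in Figure 1 more concrete, the following is a minimal toy sketch, not the model used in the patents or in any UCD simulation. It assumes the hinge can be idealised as two rigid arms meeting at a vertex, with identical point charges placed at the same distances along each arm, interacting through a Coulomb potential in a uniform dielectric (optionally with Debye screening). The function name `hinge_repulsion_energy`, the residue positions, the charges and the permittivity are all hypothetical values chosen for illustration.

```python
import math

# e^2 / (4*pi*eps0) expressed in kJ mol^-1 nm (standard conversion factor).
COULOMB_KJ_MOL_NM = 138.935


def hinge_repulsion_energy(theta_deg, positions_nm, charges_e,
                           eps_r=80.0, debye_nm=None):
    """Electrostatic energy (kJ/mol) between the two arms of a toy hinge.

    Hypothetical model: each arm carries the same point charges
    (charges_e, in units of e) at the same distances from the hinge
    vertex (positions_nm). theta_deg is the opening angle between the
    arms and must be > 0 so that no two charges coincide.
    """
    theta = math.radians(theta_deg)
    energy = 0.0
    for r_i, q_i in zip(positions_nm, charges_e):      # charges on arm 1
        for r_j, q_j in zip(positions_nm, charges_e):  # charges on arm 2
            # Law of cosines: distance between a charge on each arm.
            d = math.sqrt(r_i**2 + r_j**2 - 2.0 * r_i * r_j * math.cos(theta))
            term = COULOMB_KJ_MOL_NM * q_i * q_j / (eps_r * d)
            if debye_nm is not None:
                # Optional Debye screening by salt in the solution.
                term *= math.exp(-d / debye_nm)
            energy += term
    return energy


if __name__ == "__main__":
    # Illustrative (assumed) layout: three like-charged residues per arm.
    positions = [1.0, 2.0, 3.0]   # nm from the hinge vertex
    charges = [1.0, 1.0, 1.0]     # units of e
    for angle in (20, 60, 120, 180):
        e = hinge_repulsion_energy(angle, positions, charges)
        print(f"opening angle {angle:3d} deg: repulsion {e:.3f} kJ/mol")
```

In this simplified picture the repulsion energy falls steadily as the hinge opens, which is the qualitative reason like charges on either side of the centre favour the open ("OFF") state. Adding or moving charges changes how strongly the open state is favoured, mirroring the caption's remark that their location and number can be adjusted for each application.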

[1] EP3265812A2, 2018-01-10, UNIV. COLLEGE DUBLIN NAT. UNIV. IRELAND. Inventors: Donal MacKernan and Shorujya Sanyal. Earliest priority: 2015-03-04, Earliest publication: 2016-09-09. https://worldwide.espacenet.com/patent/search?q=pn%3DEP3265812A2

[2] WO2018047110A1, 2018-03-15, UNIV. COLLEGE DUBLIN NAT. UNIV. IRELAND. Inventor: Donal MacKernan. Earliest priority: 2016-09-08, Earliest publication: 2018-03-15. https://worldwide.espacenet.com/patent/search?q=pn%3DWO2018047110A1

[3] Komatsu N., Aoki K., Yamada M., Yukinaga H., Fujita Y., Kamioka Y., Matsuda M., Development of an optimized backbone of FRET biosensors for kinases and GTPases. Mol. Biol. Cell. 2011 Dec; 22(23): 4647-56.