Accelerating the design and discovery of materials with tailored properties using first-principles high-throughput calculations and automated generation of Wannier functions

A successful collaboration between the EU H2020 E-CAM and MaX Centres of Excellence, and the Swiss NCCR MARVEL

Abstract

In a recent paper[1], researchers from the Centres of Excellence E-CAM[2] and MaX[3], and from the centre for Computational Design and Discovery of Novel Materials NCCR MARVEL[4], proposed a new procedure for automatically generating maximally-localised Wannier functions (MLWFs) in high-throughput frameworks. The methodology and associated software handle the hitherto difficult case of entangled bands, and allow the electronic properties of a wide variety of materials, including insulators, semiconductors and metals, to be obtained starting only from the specification of the initial crystal structure. Industrial applications that this work will facilitate include the development of novel superconductors, multiferroics and topological insulators, as well as more traditional electronic applications.

Challenge/context

Predicting the properties of complex materials generally entails the use of methods that provide coarse-grained perspectives more suitable for large-scale modelling and, ultimately, device design and manufacture. When a quantum-level description of a modular system is required, it can often be obtained by expressing the Hamiltonian in a localised, real-space basis set, which allows it to be partitioned without ambiguity into sub-matrices that correspond to the individual subsystems. Maximally-localised Wannier functions (MLWFs) are particularly suitable in this context. Until now, however, MLWFs have been difficult to exploit in the high-throughput design of materials, because generating them required users to specify a set of initial guesses, typically trial functions localised in real space, based on their experience and chemical intuition.

Solution

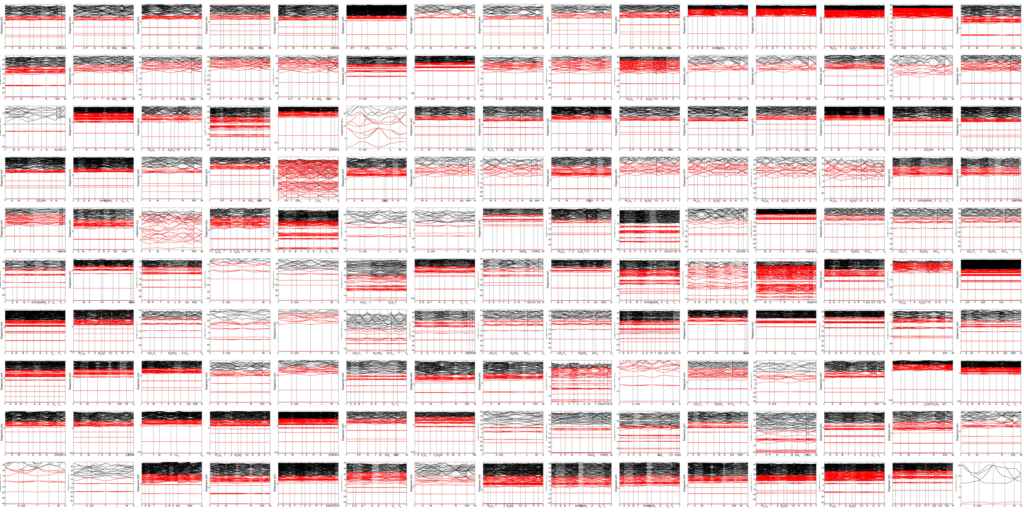

In a recent article[1], E-CAM[2] scientist Valerio Vitale and co-authors from the partner H2020 Centre of Excellence MaX[3] and the Swiss-based NCCR MARVEL[4] look afresh at this problem using an algorithm by Damle et al.[5], known as the selected columns of the density matrix (SCDM) method, to provide initial guesses for the MLWF search automatically. The SCDM method was devised to compute a set of localised orbitals associated with the Kohn–Sham subspace for insulating systems. It has shown great promise in avoiding the need for user intervention in obtaining MLWFs, and it is robust, being based on standard linear-algebra routines rather than on iterative minimisation. In particular, Vitale et al. developed a fully automated protocol based on the SCDM algorithm in which the three remaining free parameters (two from the SCDM method, plus the choice of the target dimensionality for the disentangled subspace) are determined automatically, making it parameter-free even in the case of entangled bands. The work systematically compares the accuracy and ease of use of three approaches to generating localised basis sets: (a) MLWFs; (b) MLWFs combined with SCDM; and (c) SCDM alone. It applies this comparison to hundreds of materials including insulators, semiconductors and metals.
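
To make the linear-algebra character of SCDM concrete, here is a minimal sketch of the idea for a single k-point, written with NumPy and SciPy. It is an illustration under stated assumptions, not the pw2wannier90 implementation: the function name `scdm_orbitals` and its arguments are hypothetical, and the two SCDM parameters are assumed here to be the centre `mu` and width `sigma` of a complementary-error-function weight on the band energies, as commonly used for entangled bands in the SCDM literature. The columns are selected by a QR factorisation with column pivoting and then orthonormalised with a Löwdin step.

```python
import numpy as np
from scipy.linalg import qr
from scipy.special import erfc


def scdm_orbitals(psi, n_wann=None, energies=None, mu=0.0, sigma=1.0):
    """Illustrative SCDM-style localisation at one k-point (hypothetical helper).

    psi      : (n_grid, n_bands) array, orthonormal Kohn-Sham states on a grid
    n_wann   : number of localised orbitals wanted (defaults to n_bands)
    energies : optional (n_bands,) band energies; if given, bands are weighted
               by 0.5 * erfc((energy - mu) / sigma)  (assumed weight function)
    Returns (orbitals, u) with orbitals = psi @ u and u of shape (n_bands, n_wann).
    """
    n_bands = psi.shape[1]
    if n_wann is None:
        n_wann = n_bands
    if energies is None:
        weights = np.ones(n_bands)  # isolated bands: plain projector
    else:
        weights = 0.5 * erfc((np.asarray(energies) - mu) / sigma)

    # Rows of `a` are the weighted, conjugated states: a = f(E) * psi^dagger.
    a = (psi * weights).conj().T  # shape (n_bands, n_grid)

    # QR with column pivoting ranks grid points; the leading pivots are the
    # "selected columns" of the (quasi-)density matrix.
    _, _, pivots = qr(a, mode="economic", pivoting=True)
    a_sel = a[:, pivots[:n_wann]]  # shape (n_bands, n_wann)

    # Loewdin orthonormalisation via the SVD: u = a_sel (a_sel^dagger a_sel)^(-1/2).
    w, _, vh = np.linalg.svd(a_sel, full_matrices=False)
    u = w @ vh
    return psi @ u, u


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    states, _ = np.linalg.qr(rng.standard_normal((60, 5)))  # toy orthonormal states
    orbitals, u = scdm_orbitals(states, n_wann=3,
                                energies=np.linspace(-1.0, 1.0, 5),
                                mu=0.0, sigma=0.5)
    print(orbitals.shape, u.shape)
```

In a Wannier90-style workflow, a matrix like `u` would play the role of the initial projections that seed the MLWF minimisation; no trial functions chosen by hand are involved.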

Benefit

This is significant because it greatly expands the scope of materials for which MLWFs can be generated in high-throughput (HT) studies. It has the potential to accelerate the design and discovery of materials with tailored properties using first-principles HT calculations, and to facilitate advanced industrial applications. These include the development of novel superconductors, multiferroics and topological insulators, as well as more traditional electronic applications.

Background information

This module is a collaboration between the E-CAM and MaX HPC centres of excellence, and the NCCR MARVEL.

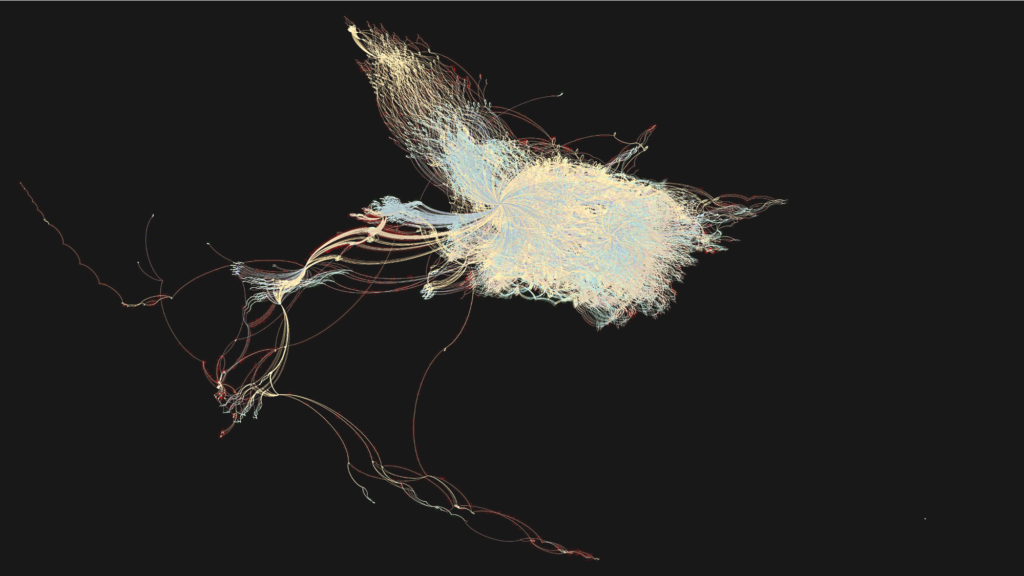

In the SCDM Wannier Functions module, E-CAM has implemented the SCDM algorithm in pw2wannier90, the interface code between the Quantum ESPRESSO software and the Wannier90 code. This was done in the context of an E-CAM pilot project at the University of Cambridge. Researchers then used this implementation as the basis for a complete computational workflow for obtaining MLWFs and electronic properties based on Wannier interpolation of the Brillouin zone, starting only from the specification of the initial crystal structure. The workflow was implemented within the AiiDA materials informatics platform (from the NCCR MARVEL and the MaX CoE), and used to perform an HT study on a dataset of 200 materials.
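
As an illustration of what Wannier interpolation means in practice, the sketch below rebuilds the Hamiltonian at arbitrary k-points from its real-space matrix elements in a localised basis and diagonalises it. It is a simplified sketch rather than the workflow's code: the function name `interpolate_bands` and the array layout are assumptions, k-points are given in fractional coordinates, and the degeneracy weights that production codes attach to each lattice vector are omitted.

```python
import numpy as np


def interpolate_bands(h_r, r_vectors, k_points):
    """Band energies from a real-space Hamiltonian (hypothetical helper).

    h_r       : (n_r, n_wann, n_wann) matrices H(R) in the localised basis,
                with H(-R) equal to the conjugate transpose of H(R)
    r_vectors : (n_r, dim) integer lattice vectors R
    k_points  : (n_k, dim) k-points in fractional coordinates
    Returns an (n_k, n_wann) array of eigenvalues in ascending order.
    """
    # Phase factors exp(2*pi*i k.R) for every (k, R) pair.
    phases = np.exp(2j * np.pi * (k_points @ r_vectors.T))  # (n_k, n_r)
    # H(k) = sum_R exp(2*pi*i k.R) H(R)
    h_k = np.einsum("kr,rij->kij", phases, h_r)
    return np.linalg.eigvalsh(h_k)


if __name__ == "__main__":
    # Toy model: one orbital per cell on a 1D chain, nearest-neighbour hopping.
    r_vectors = np.array([[-1], [0], [1]])
    h_r = np.array([[[-1.0]], [[0.5]], [[-1.0]]])
    k_path = np.linspace(0.0, 1.0, 11).reshape(-1, 1)
    print(interpolate_bands(h_r, r_vectors, k_path)[:, 0])
```

Because the localised basis makes H(R) decay quickly with |R|, only a few lattice vectors are needed, which is what makes dense Brillouin-zone sampling cheap once the MLWFs are available.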

Source Code

See the Materials Cloud Archive entry. A downloadable virtual machine is provided that allows users to reproduce the results of the associated paper and to run new calculations for different materials; it includes all first-principles and atomistic simulations and the computational workflows.

Bibliography

[1] Automated high-throughput Wannierisation, Valerio Vitale, Giovanni Pizzi, Antimo Marrazzo, Jonathan R. Yates, Nicola Marzari and Arash A. Mostofi, npj Computational Materials 2020, 6, 66; https://doi.org/10.1038/s41524-020-0312-y

[5] Compressed Representation of Kohn–Sham Orbitals via Selected Columns of the Density Matrix, Anil Damle, Lin Lin and Lexing Ying, J. Chem. Theory Comput. 2015, 11, 1463–1469; https://pubs.acs.org/doi/10.1021/ct500985f