If you are interested in attending this event, please visit the CECAM website here.

Workshop Description

In Discrete Element Methods (DEMs), the equations of motion of a large number of particles are numerically integrated to obtain the trajectory of each particle [1]. The collective movement of the particles very often gives the system unpredictable, complex dynamics that are inaccessible to any mean-field approach. Such phenomenology is present, for instance, in seemingly simple systems such as the hopper or silo, where intermittent flow accompanied by random clogging occurs [2]. With the growth of computing power and the parallel development of numerical algorithms, it has become possible to simulate such scenarios by following the trajectories of millions of spherical particles for a limited simulation time. Incorporating more complex particle shapes [3] or the influence of the interstitial medium [4] rapidly decreases the number of particles that can be simulated.
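To make the idea of integrating the equations of motion concrete, the following is a minimal sketch and not a production DEM code. It assumes two equal spheres colliding head-on in one dimension, a purely elastic linear-spring contact force, and velocity Verlet time stepping; the function names and all parameter values are hypothetical choices made for illustration.

```python
def contact_force(x1, x2, radius, stiffness):
    """Force on particle 1 due to particle 2 (assumed linear-spring contact)."""
    overlap = 2.0 * radius - (x2 - x1)
    # Repulsive only while the spheres overlap; no damping, no friction.
    return -stiffness * overlap if overlap > 0.0 else 0.0


def simulate(steps=5000, dt=1e-4, radius=0.5, mass=1.0, stiffness=1e4):
    """Integrate two particles approaching each other along a line."""
    x = [0.0, 1.5]    # initial positions (illustrative values)
    v = [1.0, -1.0]   # initial velocities: head-on approach
    f1 = contact_force(x[0], x[1], radius, stiffness)
    f = [f1, -f1]     # Newton's third law
    trajectory = []
    for _ in range(steps):
        # Velocity Verlet: half kick, drift, recompute forces, half kick.
        for i in range(2):
            v[i] += 0.5 * dt * f[i] / mass
            x[i] += dt * v[i]
        f1 = contact_force(x[0], x[1], radius, stiffness)
        f = [f1, -f1]
        for i in range(2):
            v[i] += 0.5 * dt * f[i] / mass
        trajectory.append((x[0], x[1]))
    return x, v, trajectory


if __name__ == "__main__":
    x, v, _ = simulate()
    print("final positions:", x)
    print("final velocities:", v)
```

In this sketch the particles approach, overlap briefly, and separate with their velocities approximately reversed, since the assumed contact law dissipates no energy. A realistic DEM code would add neighbor search, damping, friction, and rotation for millions of particles.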

Another class of computer simulations that is hugely popular in the science and engineering community is Computational Fluid Dynamics (CFD). A tractable approach to such simulations is the family of Lattice Boltzmann Methods (LBMs) [5]. There, instead of directly solving the strongly non-linear Navier-Stokes equations, the discrete Boltzmann equation is solved to simulate the flow of Newtonian or non-Newtonian fluids with the appropriate collision models [6,7]. The method closely resembles DEMs in that it simulates the streaming and collision processes of a limited number of fictitious particles, whose interactions give rise to viscous flow behavior at the macroscopic scale.
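The streaming and collision steps can be illustrated with a short sketch. It assumes the standard D2Q9 lattice, a single-relaxation-time (BGK) collision model for a Newtonian fluid, and fully periodic boundaries; these are illustrative choices, not necessarily the models of refs. [6,7]. The function names, grid size, and relaxation time are hypothetical.

```python
import math

# D2Q9 lattice: discrete velocities and their standard weights.
C = [(0, 0), (1, 0), (0, 1), (-1, 0), (0, -1),
     (1, 1), (-1, 1), (-1, -1), (1, -1)]
W = [4 / 9] + [1 / 9] * 4 + [1 / 36] * 4


def equilibrium(rho, ux, uy):
    """Second-order equilibrium distribution for one lattice cell."""
    usq = ux * ux + uy * uy
    feq = []
    for (cx, cy), w in zip(C, W):
        cu = cx * ux + cy * uy
        feq.append(w * rho * (1.0 + 3.0 * cu + 4.5 * cu * cu - 1.5 * usq))
    return feq


def moments(cell):
    """Density and velocity recovered from the nine populations of a cell."""
    rho = sum(cell)
    ux = sum(fi * cx for fi, (cx, _) in zip(cell, C)) / rho
    uy = sum(fi * cy for fi, (_, cy) in zip(cell, C)) / rho
    return rho, ux, uy


def lbm_step(f, tau):
    """One BGK collision followed by streaming on a periodic grid."""
    nx, ny = len(f), len(f[0])
    new = [[[0.0] * 9 for _ in range(ny)] for _ in range(nx)]
    for x in range(nx):
        for y in range(ny):
            rho, ux, uy = moments(f[x][y])
            feq = equilibrium(rho, ux, uy)
            for i, (cx, cy) in enumerate(C):
                # Collision: relax each population towards equilibrium.
                post = f[x][y][i] - (f[x][y][i] - feq[i]) / tau
                # Streaming: move it to the neighbouring cell along C[i].
                new[(x + cx) % nx][(y + cy) % ny][i] = post
    return new


def run_shear_wave(nx=16, ny=16, tau=0.8, steps=50, u0=0.05):
    """Let a sinusoidal shear wave decay; return mass and amplitude."""
    f = [[equilibrium(1.0, u0 * math.sin(2 * math.pi * y / ny), 0.0)
          for y in range(ny)] for _ in range(nx)]
    mass0 = sum(sum(cell) for col in f for cell in col)
    amp0 = max(abs(moments(cell)[1]) for col in f for cell in col)
    for _ in range(steps):
        f = lbm_step(f, tau)
    mass1 = sum(sum(cell) for col in f for cell in col)
    amp1 = max(abs(moments(cell)[1]) for col in f for cell in col)
    return mass0, mass1, amp0, amp1


if __name__ == "__main__":
    print(run_shear_wave())
```

In this sketch the total mass stays constant while the velocity amplitude of the shear wave decays, which is the viscous behavior emerging from purely local collide-and-stream rules. Each cell update depends only on its own populations, which is why the method parallelizes well.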

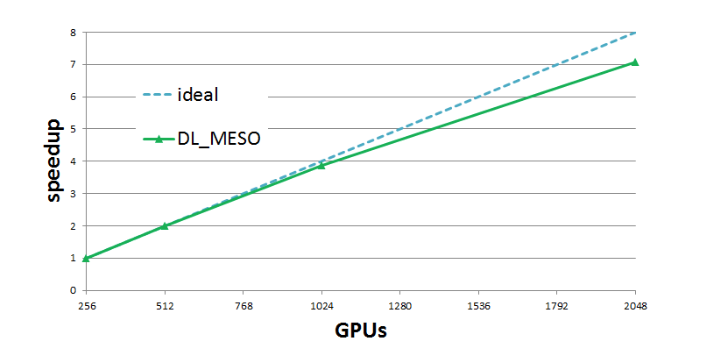

As both methods have gained popularity in solving engineering problems, and as scientists have become more aware of finite-size effects, the system sizes and simulation times required to model practically relevant systems have grown beyond the capabilities of even the most modern CPUs [8,9]. Massive parallelization is thus becoming a necessity. Graphics processing units (GPUs) offer this naturally, which makes them an attractive alternative for running these simulations, as they consist of a large number of relatively simple mathematical operations that are readily implemented on a GPU [8,9].

References

[1] P. A. Cundall and O. D. L. Strack, Geotechnique 29, 47–65 (1979).

[2] H. G. Sheldon and D. J. Durian, Granular Matter 6, 579–585 (2010).

[3] A. Khazeni and Z. Mansourpour, Powder Tech. 332, 265–278 (2018).

[4] J. Koivisto, M. Korhonen, M. J. Alava, C. P. Ortiz, D. J. Durian, A. Puisto, Soft Matter 13, 7657–7664 (2017).

[5] S. Succi, The Lattice Boltzmann Equation: For Fluid Dynamics and Beyond, Oxford University Press (2001).

[6] L. S. Luo, W. Liao, X. Chen, Y. Peng, W. Zhang, Phys. Rev. E 83, 056710 (2011).

[7] S. Gabbanelli, G. Drazer, J. Koplik, Phys. Rev. E 72, 046312 (2005).

[8] N. Govender, R. K. Rajamani, S. Kok, D. N. Wilke, Minerals Engin. 79, 152–168 (2015).

[9] P. R. Rinaldi, E. A. Dari, M. J. Vénere, A. Clausse, Simulation Modelling Practice and Theory 25, 163–171 (2012).