If you are interested in attending this event, please visit the CECAM website.

Workshop Description

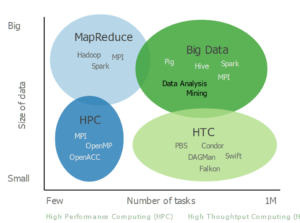

The overarching theme of this proposed E-CAM Transverse Extended Software Development Workshop (ESDW) is the design and control of molecular machines, including sensors, enzymes, therapeutics and transporters built as fusion proteins or nanocarrier-protein complexes, and in particular the software development and interfacing that this entails. Several immuno-diagnostic companies and experimental molecular biology groups have expressed strong interest in the projects at the core of this proposal. The proposed ESDW is transverse because it uses methodologies from two E-CAM Scientific Workpackages: WP1 (Advanced MD/rare-event methods) and WP4 (Mesoscale/Multiscale simulation).

Fusion proteins consist of two or more protein modules linked together, where the genetic code of each module and of the fusion protein itself is known or can easily be inferred. A fusion protein typically retains the functions of its components and in some cases gains additional functions. Fusion proteins occur in nature, but they can also be made artificially using genetic engineering and biotechnology. They are used in a wide variety of settings, ranging from unimolecular FRET sensors and novel immuno-based cancer drugs to enzymes [1,2] and energy conversion (for example, efficient generation of alcohol from cellulose) [3,4]. Fusion proteins can be expressed in cell lines using genetic engineering and then purified for in vitro use. Much of the design work focuses on how different modules are optimally linked or fused together via suitable peptides, rather than on internal changes to the modules. Such designs can be optimized experimentally, for example through random mutations, but a more controlled approach based on the underlying molecular mechanisms is desirable. A pragmatic multiscale approach that combines bioinformatics and homology modeling, coarse-graining, detailed MD, rare-event methods and machine learning is ideally suited to this task. The figure on the front of this proposal is a representative example: a fusion-protein sensor designed to bind a specific RNA sub-sequence. Binding causes an optimized hinge-like protein to close, bringing two fluorescent proteins together so that the binding event can be observed optically through FRET microscopy.
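The optical readout of such a sensor relies on the steep distance dependence of FRET. A minimal sketch using the standard Förster relation, E = 1/(1 + (r/R0)^6), is shown below. The Förster radius and the open/closed donor-acceptor distances are illustrative assumptions, not values from this proposal.

```python
def fret_efficiency(r_nm: float, r0_nm: float) -> float:
    """FRET efficiency from the Förster relation E = 1 / (1 + (r / R0)**6)."""
    if r_nm <= 0 or r0_nm <= 0:
        raise ValueError("distances must be positive")
    return 1.0 / (1.0 + (r_nm / r0_nm) ** 6)


# Assumed Förster radius (nm); the real value depends on the donor/acceptor pair.
R0_NM = 5.0

# Hypothetical donor-acceptor separations for the open and the closed hinge.
open_state = fret_efficiency(8.0, R0_NM)
closed_state = fret_efficiency(4.0, R0_NM)
print(f"open: {open_state:.3f}, closed: {closed_state:.3f}")  # open: 0.056, closed: 0.792
```

In this example E rises from about 0.06 to about 0.79 when the separation halves across R0. This is why a hinge design aims to switch the donor-acceptor distance from well above R0 to below it.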

Nanocarriers (NCs) are promising tools for cancer immunotherapy and other diagnostic and therapeutic applications. NCs can be decorated on their surface with molecules that facilitate target-specific antigen delivery to certain antigen-presenting cell types or tumor cells. However, the target cell-specific uptake of nano-vaccines is highly dependent on modifications of the NC itself. One such modification is the formation of a protein corona [5] around NCs after in vivo administration. Appropriate targeting of NCs can be affected by unintended interactions of the NC surface with components of blood plasma and/or with cell-surface structures that are unrelated to the specific targeting structure. The protein corona may affect the organ-specific or cell type-specific trafficking of NCs, as well as their endocytosis and/or functional properties. Most importantly, the protein corona has been shown to interfere with targeting moieties used to induce receptor-mediated uptake of NCs, both inhibiting and enhancing internalization by specific cell types [5]. Moreover, the protein corona is taken up by the target cell, which may alter the function of that cell. Therefore, tailoring the surface properties of NCs to favor the adsorption of specific proteins and to control the structure of the corona can significantly improve their performance. Modifying surface properties, e.g. by grafting oligomers, is also known to affect the preferred orientation of adsorbed proteins and therefore their functionality [6]. The molecular design would include selecting an appropriate NC coating and type of antibody to optimize NC uptake.

Mesoscale simulation is required to understand the thermodynamics and kinetics of protein adsorption on the NCs with engineered surfaces [7] and to achieve the desired structure with preferred adsorption of the selected antigen. However, the aforementioned issues often require biological and chemical accuracy that typical mesoscale models cannot achieve unless buttressed by accurate simulations at an atomistic/molecular level, rare-event methods and machine learning.
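To reason qualitatively about which proteins dominate the corona at equilibrium, one simple option is a competitive Langmuir model: θ_i = K_i c_i / (1 + Σ_j K_j c_j), with K_i = exp(-ΔG_i/RT) for a 1 M standard state. The sketch below is an illustration under strong assumptions. It ignores adsorption kinetics (e.g. the Vroman effect, where abundant proteins adsorb first and are later displaced), multilayer coronas and protein size. The protein names and free energies are hypothetical. In practice, ΔG_i would come from the mesoscale or atomistic simulations described above.

```python
import math

RT_KJ_PER_MOL = 2.479  # RT at about 298 K


def corona_coverage(proteins: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Equilibrium surface coverage from a competitive Langmuir model.

    proteins maps a name to (dG_ads in kJ/mol for a 1 M standard state,
    bulk concentration in mol/L). Returns the fractional coverage theta_i.
    """
    # K_i * c_i with K_i = exp(-dG_i / RT) in 1/M
    weights = {
        name: math.exp(-dg / RT_KJ_PER_MOL) * conc
        for name, (dg, conc) in proteins.items()
    }
    denominator = 1.0 + sum(weights.values())
    return {name: w / denominator for name, w in weights.items()}


# Hypothetical mixture: an abundant weak binder versus a scarce strong binder.
mixture = {
    "abundant_weak": (-20.0, 6e-4),
    "scarce_strong": (-45.0, 1e-6),
}
for name, theta in corona_coverage(mixture).items():
    print(f"{name}: {theta:.3f}")
```

With these made-up numbers, the scarce, strongly binding protein occupies most of the surface despite a 600-fold lower concentration. This illustrates why shifting binding free energies through surface chemistry can change corona composition.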

A pragmatic approach to improving fusion proteins and NCs is as follows.

(i) Molecular designs are initially developed and optimized as simple coarse-grained (CG) models, using information theory and machine learning.

(ii) Solving the inverse problem, i.e. building the fusion protein or the NC-protein complex to match the design, requires a multiscale approach that combines mesoscale modeling, molecular dynamics, rare-event methods, machine learning, homology modeling, mutation and variation of solvent conditions.

(iii) Iterate steps (i) and (ii) to optimize the design, and in the process collect data for machine-learning-driven design.

(iv) Final validation using detailed MD, rare-event methods and HPC.
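Steps (i) to (iv) form an outer optimization loop. The sketch below illustrates that loop for a single design variable, the length of a flexible peptide linker, under several assumptions:

- The CG model is a worm-like chain with an assumed per-residue contour length and persistence length.
- `detailed_distance` is a hypothetical placeholder for the detailed MD/rare-event evaluation.
- The "machine learning" step is reduced to a least-squares correction factor fitted to the collected data.

It is not the workshop's software.

```python
import math

B_NM = 0.38  # assumed contour length per residue (nm)
P_NM = 0.45  # assumed persistence length of a flexible Gly/Ser-rich linker (nm)


def wlc_rms_distance(n_residues: int, p_nm: float = P_NM, b_nm: float = B_NM) -> float:
    """CG model, step (i): RMS end-to-end distance of a worm-like chain,
    <R^2> = 2 P Lc [1 - (P / Lc) (1 - exp(-Lc / P))] with Lc = n * b."""
    lc = n_residues * b_nm
    msd = 2.0 * p_nm * lc * (1.0 - (p_nm / lc) * (1.0 - math.exp(-lc / p_nm)))
    return math.sqrt(msd)


def detailed_distance(n_residues: int) -> float:
    """HYPOTHETICAL placeholder for detailed MD / rare-event evaluation
    (steps ii and iv). Here it simply pretends the real linker is 15%
    more extended than the CG model predicts."""
    return 1.15 * wlc_rms_distance(n_residues)


def design_linker(target_nm: float, n_max: int = 40, max_iter: int = 5):
    correction = 1.0  # multiplicative correction to the CG model, learned from data
    data = []         # (n, cg_estimate, detailed_value) collected in step (iii)
    best_n = None
    for _ in range(max_iter):
        # (i) optimize the design on the cheap, corrected CG model
        n = min(range(1, n_max + 1),
                key=lambda k: abs(correction * wlc_rms_distance(k) - target_nm))
        # (ii) evaluate the candidate with the expensive model and record it
        cg, measured = wlc_rms_distance(n), detailed_distance(n)
        data.append((n, cg, measured))
        # (iii) refit the correction to all data: least squares for measured = c * cg
        correction = sum(c * m for _, c, m in data) / sum(c * c for _, c, _ in data)
        if n == best_n:
            break  # the proposed design no longer changes
        best_n = n
    return n, correction, data


n, correction, data = design_linker(target_nm=3.0)
print(n, round(correction, 3), len(data))
```

The correction is refitted to all collected pairs, not only the latest one. This lets the data gathered in step (iii) accumulate into a better CG model over successive iterations.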

Over the course of two 5-day meetings separated by several months, the planned ESDW will produce multiple software modules, including the following.

(a) C/C++/modern Fortran or Python-based codes to build and optimize simple CG models of fusion proteins or NC-protein complexes using information theory and machine learning.

(b) Semi-automated pipelines to solve the inverse problem of building the fusion protein or the NC to match the design. This will involve interfacing with MD/mesoscale engines such as LAMMPS, GROMACS, OpenMM and ESPResSo, rare-event codes such as PLUMED, and bioinformatics codes such as I-TASSER and IntFOLD.

(c) Particle insertion/deletion methods for alchemical transformations: mutation of amino acids, changes in the solvent, and the associated changes in free energy.

(d) Codes to add corrections to coarse-grained models (bead models/MARTINI) using detailed atomistic data (e.g. potentials of mean force for key order parameters, structure factors, etc.) or experimental data where available.
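For item (c), the simplest estimator underlying both alchemical mutation and particle insertion is Zwanzig's free energy perturbation formula, ΔG = -kT ln⟨exp(-ΔU/kT)⟩_0. Here ΔU = U_1 - U_0 is evaluated on configurations sampled in state 0; Widom test-particle insertion has the same form. The sketch below applies the formula to synthetic Gaussian ΔU samples, for which the exact answer ΔG = μ - σ²/(2kT) is known. The numbers are hypothetical. Production calculations would typically use several intermediate λ states and estimators such as BAR or MBAR.

```python
import math
import random


def zwanzig_free_energy(delta_u: list[float], kt: float) -> float:
    """Free energy perturbation: dG = -kT ln < exp(-dU / kT) >_0.

    delta_u holds U_1 - U_0 evaluated on configurations sampled from state 0.
    A log-sum-exp shift keeps the exponential average numerically stable.
    """
    x = [-du / kt for du in delta_u]
    x_max = max(x)
    log_mean = x_max + math.log(sum(math.exp(xi - x_max) for xi in x) / len(x))
    return -kt * log_mean


# Synthetic check: Gaussian dU has the exact result dG = mu - sigma^2 / (2 kT).
random.seed(1)
kt = 2.479              # kJ/mol at about 298 K
mu, sigma = 10.0, 1.5   # hypothetical mean and width of dU (kJ/mol)
samples = [random.gauss(mu, sigma) for _ in range(200_000)]
estimate = zwanzig_free_energy(samples, kt)
exact = mu - sigma**2 / (2 * kt)
print(f"FEP estimate {estimate:.2f} kJ/mol vs exact {exact:.2f} kJ/mol")
```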
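For item (d), one widely used way to correct a CG pair potential against atomistic structural data is iterative Boltzmann inversion (IBI). It starts from V_0(r) = -kT ln g_target(r) and repeatedly applies the update V_{n+1}(r) = V_n(r) + α kT ln[g_n(r)/g_target(r)], where g_n is the radial distribution function from a CG simulation run with V_n. The sketch below shows only the update arithmetic on tabulated values. The RDFs are hypothetical, the damping factor α is an assumed choice, and the proposal does not state that IBI is the method the workshop will use.

```python
import math


def boltzmann_inversion(g_target: list[float], kt: float) -> list[float]:
    """Initial CG pair potential V0(r) = -kT ln g_target(r); infinite where g = 0."""
    return [-kt * math.log(g) if g > 0 else math.inf for g in g_target]


def ibi_update(v_old, g_cg, g_target, kt, alpha=1.0):
    """One iterative Boltzmann inversion step on tabulated values:
    V_new(r) = V_old(r) + alpha * kT * ln(g_cg(r) / g_target(r)).
    Bins where either RDF is zero are left unchanged."""
    v_new = []
    for v, gc, gt in zip(v_old, g_cg, g_target):
        if gc > 0 and gt > 0:
            v_new.append(v + alpha * kt * math.log(gc / gt))
        else:
            v_new.append(v)
    return v_new


kt = 2.479  # kJ/mol at about 298 K
# Hypothetical RDFs tabulated on four distance bins.
g_target = [0.5, 1.8, 1.1, 1.0]  # from atomistic reference simulations
g_cg = [0.9, 1.4, 1.0, 1.0]      # from a CG run using v0
v0 = boltzmann_inversion(g_target, kt)
v1 = ibi_update(v0, g_cg, g_target, kt, alpha=0.5)
print([round(v, 3) for v in v1])
```

Where the CG model over-populates a distance (g_cg > g_target), the potential becomes more repulsive there, and vice versa. In a real workflow, each update is followed by a new CG simulation until the RDFs agree.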

While this is an ambitious plan, it is worth pointing out that a similar integrated approach to protein development has already been taken by the lab of John Chodera [8]. Although that work did not focus on fusion proteins or NC-protein complexes, nor systematically incorporate coarse-graining, it demonstrates both the feasibility of what we propose here and how to achieve practical solutions. Other systematic approaches to molecular design using MD simulation have also been proposed recently [9,10].

References

[1] H. Yang et al., The promises and challenges of fusion constructs in protein biochemistry and enzymology, Appl. Microbiol. Biotechnol. (2016)

[2] A. Bochicchio et al., Designing the Sniper: Improving Targeted Human Cytolytic Fusion Proteins for Anti-Cancer Therapy via Molecular Simulation, Biomedicines 5(1), 9 (2017)

[3] Y. Fujita et al., Direct and Efficient Production of Ethanol from Cellulosic Material with a Yeast Strain Displaying Cellulolytic Enzymes, Appl. Environ. Microbiol. 68(10), 5136–5141 (2002)

[4] M. Gunnoo et al., Nanoscale Engineering of Designer Cellulosomes, Adv. Mater. 28(27), 5619-4 (2016)

[5] M. Bros et al., The Protein Corona as a Confounding Variable of Nanoparticle-Mediated Targeted Vaccine Delivery, Front. Immunol. 9, 1760 (2018)

[6] I. Lieberwirth et al., The Role of the Protein Corona in the Uptake Process of Nanoparticles, Microscopy and Microanalysis 24 (Suppl. S1) (2018)

[7] H. Lopez et al., Multiscale Modelling of Bionano Interface, Adv. Exp. Med. Biol. 947, 173–206 (2017)

[8] D. L. Parton et al., Ensembler: Enabling High-Throughput Molecular Simulations at the Superfamily Scale, PLoS Comput. Biol. 12(6), e1004728 (2016)

[9] P. V. Komarov et al., A new concept for molecular engineering of artificial enzymes: a multiscale simulation, Soft Matter 12, 689–704 (2016)

[10] B. A. Thurston et al., Machine learning and molecular design of self-assembling π-conjugated oligopeptides, Mol. Simul. 44, 930–945 (2018)

[11] D. Carroll, Genome Engineering with Targetable Nucleases, Annu. Rev. Biochem. 83, 409–439 (2014)

E-CAM is organising a one week (16-20 July 2018)
