Challenges to Industry of drug substance development

Written by Ana Catarina Fernandes Mendonça

Computation-based methods play a growing role in all stages of accelerated medicine pipelines, responding to industry challenges in drug substance development.

Abstract

APC was created in 2011 by Dr. Mark Barrett and Prof. Brian Glennon of the University College Dublin School of Chemical and Bioprocess Engineering, with a mission to harness state-of-the-art research methods and know-how to accelerate drug process development. Since then, it has grown organically, partnering with companies across the world, large and small, to bring medicines to market at unprecedented speed. Computation-based methods play a growing role in all stages of its medicine pipeline, as explained by Dr. Jacek Zeglinski in this E-CAM interview on the challenges to industry of drug substance development.

What is APC?

APC, the company I work for, stands for Applied Process Company. We are based in South County Dublin, Ireland, just a half-hour walk from the Irish Sea. APC is a global company; although it is perhaps not very big, it is impactful because we collaborate with a range of pharmaceutical companies across the world, both big and small. We provide services to help them accelerate the development of active pharmaceutical ingredients (APIs), both small molecules and biomolecules. We work in a number of domains of product development, from early-stage development through optimization up to scale-up and technology transfer.

Can you tell us how you got involved with APC?

I learned about APC in 2012, while working as a postdoc at the University of Limerick. At that time, APC was a newly established start-up company. Some time later, two friends from my research group joined APC, so I very soon had a first-hand opinion of the company. The superior company culture and the deep-dive research focus turned out to be sufficiently strong arguments for me to join APC in early 2019.

What challenges does APC focus on, say in the context of drug development?

When we refer to drugs or drug substances, we have in mind the powder forms that are the basis for making tablets. Usually they are crystalline, but sometimes they are amorphous. There are a number of challenges we face, and the poor solubility of active ingredients is probably the most important. Until recently, people would have considered APIs to be small molecules, but nowadays they are getting larger and larger, up to 1000 g/mol in molecular weight. They are also quite flexible and complex, which brings challenges related to their solubility. Most of the APIs we handle have poor solubility in a range of solvents. If we could not solve the solubility problem, this would translate into insufficient bioavailability and make it difficult to manufacture those APIs with high productivity (i.e., it would lead to low yield and throughput).

The second challenge we face is polymorphism. Crystalline materials can exist in a number of different crystal forms that are interrelated in terms of stability; some of them are metastable, and the most stable ones are not always easy to obtain. Sometimes one gets unwanted solvates or hydrates at the start of the development process, when screening is done in a range of different solvents. The third issue relates to particle size and shape, which can cause poor processability, filterability, flowability and compressibility, and difficulty in forming tablets. Particles that are too large can have poor or inconsistent dissolution rates and bioavailability. Agglomeration is another challenge, with the consequent difficulty of removing impurities, particularly when they are trapped in the voids within agglomerates.

What computational approaches do you use?

In our work on optimizing processes, we mainly rely on experiments, but we also use computational methods. I will briefly highlight the latter, starting with our typical workflow for solvent selection in crystallisation processes.

Solubility Predictions for Crystallisation Processes

Computational tools allow us to screen many more solvents than would be possible by experiment alone. The workflow usually starts with 70 solvents, which are screened computationally for the temperature dependence of solubility, the propensity to form solvates or hydrates, and their utility for purging impurities, ending up with fewer than 10 promising solvents for experimental validation. This computational screen takes two to three days, whereas doing it experimentally would take several weeks or even months. We then check the crystal form, purity, and some other parameters, which allow us to narrow our candidates down to two, three, or even one solvent for small-scale crystallisation experiments. The optimal solvent system is then used to develop the full process, followed by a final scale-up demonstration. As you can see, the computational part is a very important piece of this workflow, and it really aids solvent selection.

Regarding software, we mainly use two platforms from BIOVIA: COSMOtherm (combined with a DFT-based program, TURBOMOLE) and Materials Studio. With COSMOtherm we predict solubilities, as well as the propensity for solvate, cocrystal and even salt formation. With Materials Studio, we search for low-energy molecular conformations and use them for solubility prediction in COSMOtherm, so the two codes work together. We also use Materials Studio for lattice and cohesive energy calculations, to estimate the thermodynamic stability of polymorphs, find imperfections in the crystal lattice, or predict crystal shape. We are constantly developing these capabilities and trying to do more predictive modelling. Looking at the molecular complexity of the future medicines we handle, we clearly see a real need to better understand the solid-state features of those APIs, which are very often multicomponent materials, e.g. cocrystals, salt-cocrystal-hydrate hybrids, etc.
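The screening funnel described above can be sketched as a simple multi-criteria filter. This is a minimal illustration only, not APC's tooling: the `SolventScore` fields, the thresholds in `screen`, and the solvent names and scores are all hypothetical placeholders for values that would come from COSMOtherm predictions.

```python
from dataclasses import dataclass


@dataclass
class SolventScore:
    name: str
    solubility_ratio: float  # hypothetical predicted S(T_high) / S(T_low)
    solvate_risk: float      # hypothetical 0..1 propensity to form a solvate/hydrate
    impurity_purge: float    # hypothetical 0..1 predicted impurity rejection


def screen(candidates, min_ratio=3.0, max_solvate_risk=0.3, min_purge=0.5, keep=10):
    """Keep solvents passing every (hypothetical) criterion, ranked by temperature dependence."""
    passing = [
        c for c in candidates
        if c.solubility_ratio >= min_ratio
        and c.solvate_risk <= max_solvate_risk
        and c.impurity_purge >= min_purge
    ]
    passing.sort(key=lambda c: c.solubility_ratio, reverse=True)
    return passing[:keep]


candidates = [
    SolventScore("solvent_A", 5.2, 0.10, 0.80),
    SolventScore("solvent_B", 1.5, 0.05, 0.90),  # solubility curve too flat
    SolventScore("solvent_C", 4.1, 0.60, 0.70),  # high solvate risk
    SolventScore("solvent_D", 3.4, 0.20, 0.65),
]
shortlist = screen(candidates)
print([s.name for s in shortlist])  # ['solvent_A', 'solvent_D']
```

Hard cut-offs keep the sketch readable; a weighted score would be an equally reasonable design when criteria trade off against each other.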

How do the computational solubility predictions compare with experiment?

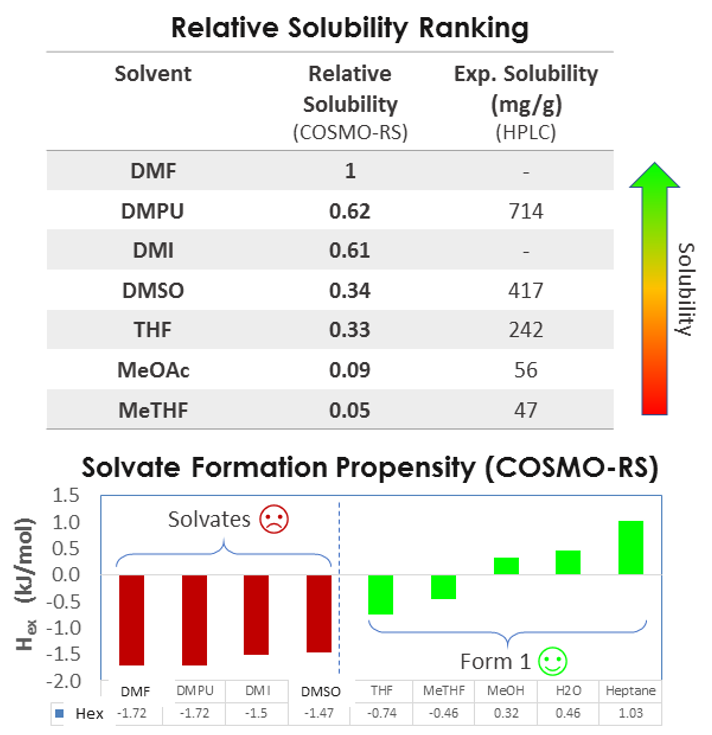

I would like to show how relative solubility predictions performed with COSMO compare with experiments for a medium-sized API molecule (500 g/mol) in a variety of solvents. As you can see, the predicted and experimental rank orders of solubility match well.
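Agreement in rank order, as opposed to absolute values, is commonly quantified with the Spearman rank correlation coefficient. The sketch below is our own illustration, not part of the COSMO workflow; the solubility values are hypothetical, and the closed-form formula used assumes there are no tied values.

```python
def ranks(values):
    """Rank values from 1 (smallest) to n; assumes there are no ties."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0] * len(values)
    for rank, i in enumerate(order, start=1):
        result[i] = rank
    return result


def spearman_rho(x, y):
    """Spearman rank correlation, 1 - 6*sum(d^2) / (n(n^2 - 1)); valid only without ties."""
    n = len(x)
    d2 = sum((a - b) ** 2 for a, b in zip(ranks(x), ranks(y)))
    return 1.0 - 6.0 * d2 / (n * (n * n - 1))


# Hypothetical relative solubilities in five solvents (same solvent order in both lists).
predicted = [0.8, 2.5, 12.0, 40.0, 95.0]
experimental = [1.1, 3.0, 9.5, 80.0, 70.0]  # the last two solvents swap places
print(spearman_rho(predicted, experimental))  # 0.9
```

A value of 1 means identical rankings, which is what matters when the goal is to pick the best solvent rather than to hit absolute numbers.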

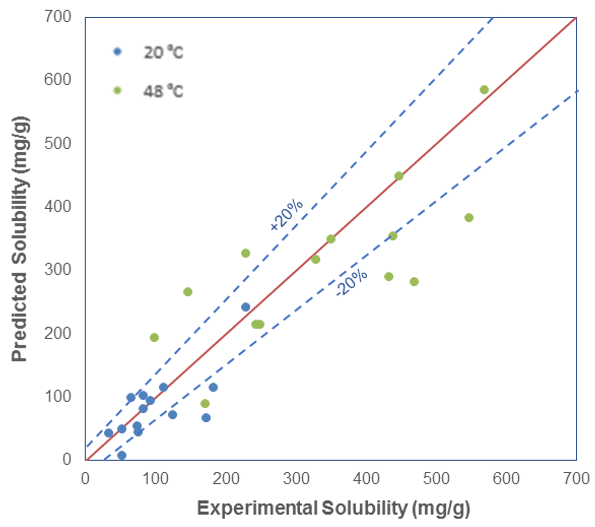

So we can predict relative solubility and, in addition, we can compute the enthalpy of mixing, which reflects the interaction between solvent and solute and indicates the propensity for solvate formation. More negative values indicate exothermic mixing and stronger interactions, while more positive values indicate endothermic, unfavorable mixing. The case study presented was therefore a very successful validation of this approach. In addition to relative solubility, we can predict absolute solubility and compare it with experiment, for example at two different temperatures, 20 and 48 °C.

As you can see, for some systems the solubility is predicted very well, and for most systems the computational and experimental results are within 20% of each other. At the lower temperature the agreement is very good, but at the higher temperature the predictions are less accurate.
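The "within 20%" criterion can be made concrete as a relative deviation from the experimental value. This is a minimal sketch, assuming the deviation is measured relative to experiment (other conventions, such as log-scale errors, are also common); the solubility numbers are hypothetical.

```python
def relative_deviation(predicted, experimental):
    """|predicted - experimental| / experimental."""
    return abs(predicted - experimental) / experimental


def fraction_within(predicted, experimental, tol=0.20):
    """Fraction of systems whose prediction lies within tol (relative) of experiment."""
    hits = sum(1 for p, e in zip(predicted, experimental) if relative_deviation(p, e) <= tol)
    return hits / len(experimental)


# Hypothetical absolute solubilities (mg/mL) of one API in four solvents at 20 °C.
predicted = [10.5, 22.0, 4.1, 60.0]
experimental = [10.0, 25.0, 5.5, 52.0]
print(fraction_within(predicted, experimental))  # 0.75
```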

In addition to single solvents, we can predict solubility in solvent mixtures. This is a very useful application because, for many APIs, no single neat solvent with suitable solubility can be identified. In such cases, there is often a solvent mixture that gives good solubility for the API. For example, in the binary mixture of water and ethyl acetate, certain solvent ratios give good solubility for a variety of solutes. We can also study ternary, quaternary, and higher-order solvent mixtures computationally.
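One common way to describe how solubility varies with composition in a binary solvent mixture, and to locate a composition where it peaks, is the empirical Jouyban-Acree model, ln x_m = f1 ln x1 + f2 ln x2 + (f1 f2 / T) Σ J_i (f1 − f2)^i. The interview does not say this model is used at APC (the predictions there come from COSMO); it is swapped in here only to illustrate the idea. The neat-solvent solubilities and the interaction parameters `J` are hypothetical; in practice they would be fitted to data.

```python
import math


def jouyban_acree_ln_x(f1, ln_x1, ln_x2, T, J):
    """ln of mole-fraction solubility in a binary solvent mixture (Jouyban-Acree form).

    f1 is the fraction of solvent 1 in the solute-free mixture; ln_x1 and ln_x2 are
    the neat-solvent solubilities at temperature T (K); J are interaction parameters.
    """
    f2 = 1.0 - f1
    interaction = sum(j * (f1 - f2) ** i for i, j in enumerate(J))
    return f1 * ln_x1 + f2 * ln_x2 + f1 * f2 * interaction / T


def best_composition(ln_x1, ln_x2, T, J, steps=100):
    """Scan f1 on a uniform grid and return the composition with the highest solubility."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=lambda f1: jouyban_acree_ln_x(f1, ln_x1, ln_x2, T, J))


# Hypothetical inputs: neat solubilities x1 = 0.01 and x2 = 0.005 at 298.15 K,
# with a made-up positive interaction parameter that favours mixing.
ln_x1, ln_x2, T, J = math.log(0.01), math.log(0.005), 298.15, (1500.0, 0.0, 0.0)
f1 = best_composition(ln_x1, ln_x2, T, J)
x_mix = math.exp(jouyban_acree_ln_x(f1, ln_x1, ln_x2, T, J))
print(f1, round(x_mix, 4))  # an interior composition, more soluble than either neat solvent
```

With all `J` set to zero the model reduces to a log-linear interpolation, which can never beat the better neat solvent; the interaction terms are what allow a mixture to outperform both.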

How important is the stability of different conformers of an API in a solvent?

Conformational Aspects in Solubility Predictions

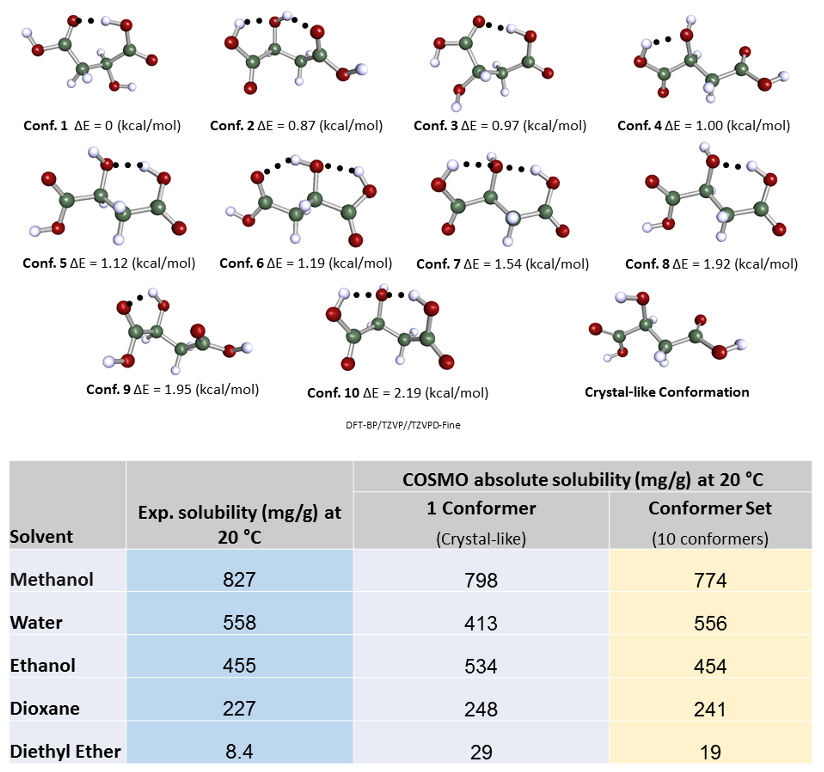

This is perhaps a more fundamental, and interesting, question. I will show an example of solubility predictions for malic acid, focusing on different conformations. Malic acid can form a number of intramolecular hydrogen bonds, which is reflected in different relative conformational energies. One hypothesis is that, for solubility predictions, the lowest-energy conformation should dominate, because it should be the most probable and most frequently occurring conformation in solution. However, we find that this is not always the case. We compared COSMO solubility predictions in five different solvents with experimental values, using either the crystal-like molecular conformation obtained in vacuum with DFT or ten other low-energy conformers identified on the basis of their relative energetic stability.
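The "lowest-energy conformer dominates" hypothesis comes from Boltzmann statistics: a conformer's equilibrium population falls off exponentially with its relative energy. This is a minimal sketch, assuming hypothetical relative energies in kJ/mol treated as free energies; COSMOtherm handles conformer sets in its own way, so the code only illustrates the reasoning behind the hypothesis.

```python
import math

R_KJ = 8.314462618e-3  # molar gas constant, kJ/(mol K)


def boltzmann_populations(rel_energies_kj, T=298.15):
    """Equilibrium fraction of each conformer from its relative energy (kJ/mol)."""
    e_min = min(rel_energies_kj)
    weights = [math.exp(-(e - e_min) / (R_KJ * T)) for e in rel_energies_kj]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical relative energies of four conformers, lowest first.
pops = boltzmann_populations([0.0, 2.0, 5.0, 10.0])
print([round(p, 3) for p in pops])  # [0.626, 0.279, 0.083, 0.011]
```

Even a 2 kJ/mol gap leaves a substantial minority population, which is one reason why a single lowest-energy conformer need not give the best prediction.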

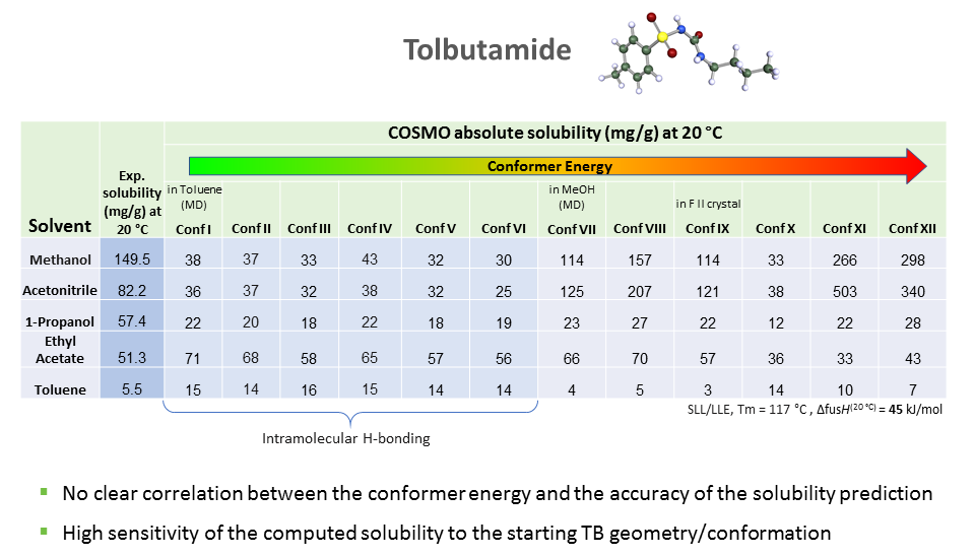

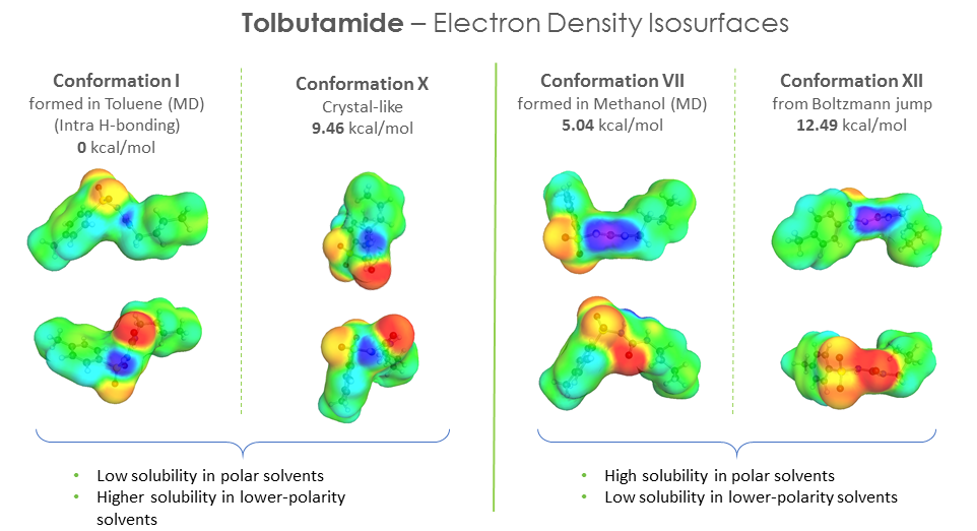

As we can see, the predicted solubilities compare fairly well with experiment, particularly in terms of relative ranking, which is what allows the best solvent to be chosen. Using ten conformers gives slightly better predictions of absolute solubility. However, agreement between prediction and experiment is not always this good; for molecules that are larger and more flexible, the challenge increases. Another case we have studied is tolbutamide. In polar solvents such as methanol, the molecule should not adopt a conformation with an intramolecular hydrogen bond, but in non-polar or low-polarity solvents such as toluene, it should. We generated a variety of conformations, some with intramolecular hydrogen bonds, and predicted the solubilities using these conformations with their different relative energies.

The hypothesis that the lower the energy of the conformer, the better the resulting solubility prediction, does not hold in this case: for tolbutamide, there is no clear correlation between conformer energy and the accuracy of the predicted solubility relative to experiment.

We also looked at the electron density isosurfaces of the different conformers and found a correlation between polar "domains" in the charge distribution (local concentrations of positive and negative charge) and solubility in polar solvents; conversely, solubility in non-polar solvents correlated with conformers whose positive and negative charges were more scattered and less pronounced. We would like to quantify this and explore it further.

How do you predict the morphology of APIs and how well do they compare with experiments?

Crystal Shape/Morphology Prediction of Pharmaceutical Compounds

In our projects, before starting any experiments, we try to predict as many properties as possible, including the shape/morphology of the crystals of a particular API. The predictions are made in vacuum and very often reflect the experimental morphology; there are some exceptions, but in most cases we find good agreement. We use attachment energy theory, which assumes that the growth rate of a facet is proportional to its attachment energy, i.e. the energy released when a slice of crystal is added to that facet (or lattice direction). The higher the attachment energy (in absolute value) on a facet, the faster the growth in that direction. Based on this, we can predict the shape of the crystalline particle and its intrinsic propensity to grow in particular directions, which also gives us the theoretical aspect ratio. It turns out that this is often related to the relative polarity of different facets, basically the number of polar atoms at the different surfaces. Usually, the higher the polarity, the stronger the hydrogen bonding and the faster the growth rate in that direction. However, there can be exceptions. For example, for elongated and somewhat flat molecules like clofazimine, no intermolecular hydrogen bonds occur, and molecular packing is more important.

Ideally, we would like to predict the effect of the solvent on crystal shape. Sometimes we are able to correlate the surface chemistry of the facets with the morphology and with the impact of a particular solvent type. For example, some systems have highly polar charge distributions on certain facets; in such cases, polar protic solvents would preferentially interact with the polar atoms on those facets and inhibit crystal growth there, while allowing growth along low-polarity directions. We have found some preliminary experimental evidence of this, but it still has to be confirmed. We are trying to use this sort of analysis to predict which solvent will generate the desired morphology. We are also exploring more complex modelling approaches, including implicit and explicit solvent effects on crystal morphology, and our modelling work in this context is promising. I would like to thank the CEO of APC, Dr. Mark Barrett, for the opportunity to do this work, and my co-authors, Dr. Marko Ukrainczyk and Prof. Brian Glennon.
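Under the attachment energy model described in the interview, the relative growth rate of each facet is taken as proportional to the magnitude of its attachment energy. The sketch below assumes, for simplicity, a crystal bounded by three orthogonal facet families, so that each particle dimension scales with the growth rate of the facet normal to it and the aspect ratio is the ratio of the fastest to the slowest rate. The Miller indices and energies are hypothetical; a real prediction (for example in Materials Studio) builds the full Wulff shape.

```python
def relative_growth_rates(e_att):
    """Growth rate of each facet family relative to the slowest one.

    Assumes the attachment energy model: rate proportional to |E_att|.
    """
    magnitudes = {hkl: abs(e) for hkl, e in e_att.items()}
    slowest = min(magnitudes.values())
    return {hkl: m / slowest for hkl, m in magnitudes.items()}


def aspect_ratio(e_att):
    """Fastest/slowest growth rate; equals length/width only for an idealised
    box-shaped crystal with orthogonal facet families."""
    rates = relative_growth_rates(e_att)
    return max(rates.values()) / min(rates.values())


# Hypothetical attachment energies (kJ/mol) for three orthogonal facet families.
e_att = {"(100)": -120.0, "(010)": -45.0, "(001)": -30.0}
print(relative_growth_rates(e_att))  # {'(100)': 4.0, '(010)': 1.5, '(001)': 1.0}
print(aspect_ratio(e_att))           # 4.0: elongated along the (100) facet normal
```

Fast-growing facets shrink out of the final habit, so in this toy case the particle becomes needle-like along the (100) normal while the slow (001) facets dominate its surface.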

Development of an HTC-based, scalable committor analysis tool in OpenPathSampling opens avenues to investigate enzymatic mechanisms linked to Covid-19

Written by Louise Couton

The E-CAM HPC Centre of Excellence and a PRACE team in Wroclaw have teamed up to develop High Throughput Computing (HTC) based tools to enable the computational investigation of reaction mechanisms in complex systems. These tools might help us gain understanding of the enzymatic mechanisms used by the SARS-CoV-2 main protease [1].

Studying reaction mechanisms in complex systems requires powerful simulations. Committor analysis is a powerful but computationally expensive tool developed for this purpose. A less expensive alternative is to use the committor to generate initial trajectories for transition path sampling. In this project, the main goal was to integrate the committor analysis methodology into an existing software application, OpenPathSampling [2,3] (OPS), which performs many variants of transition path sampling (TPS) and transition interface sampling (TIS), as well as other useful calculations for rare events. OPS is performance-portable across a range of HPC hardware and hosting sites.

Committor analysis is essentially an ensemble calculation that maps straightforwardly onto an HTC workflow, in which typical individual tasks have moderate scalability and indefinite duration. Since this workflow requires dynamic and resilient scalability within the HTC framework, OPS was coupled to E-CAM's HTC library jobqueue_features [4], which leverages the Dask [5,6] data analytics framework and implements support for managing MPI-aware tasks.
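The committor of a configuration is the probability that a trajectory started from it reaches product state B before reactant state A, estimated by firing many independent, randomly initialised trajectories ("shots"). Because the shots are independent, they map naturally onto HTC tasks. The sketch below is a toy stand-in, not the OPS or jobqueue_features API: it uses a hypothetical one-dimensional double-well potential with overdamped Langevin dynamics, and a standard-library thread pool where the real setup would dispatch tasks through Dask.

```python
import math
import random
from concurrent.futures import ThreadPoolExecutor


def force(x):
    """Force -dV/dx for the toy double well V(x) = (x^2 - 1)^2 (hypothetical model)."""
    return -4.0 * x * (x * x - 1.0)


def shoot(x0, shot_id, kT=0.3, dt=5e-3, a_max=-0.8, b_min=0.8, max_steps=1_000_000):
    """Run one overdamped Langevin trajectory from x0.

    Returns 1 if it enters state B (x >= b_min) before state A (x <= a_max), else 0.
    """
    rng = random.Random(f"{x0}:{shot_id}")  # reproducible, independent stream per shot
    noise = math.sqrt(2.0 * kT * dt)
    x = x0
    for _ in range(max_steps):
        x += force(x) * dt + noise * rng.gauss(0.0, 1.0)
        if x <= a_max:
            return 0
        if x >= b_min:
            return 1
    raise RuntimeError("trajectory did not commit within max_steps")


def committor(x0, n_shots=200, workers=4):
    """Estimate p_B(x0) as the fraction of independent shots that reach B first.

    Each shot is an independent task; a local thread pool stands in for the HTC
    scheduler (in the E-CAM work, Dask via jobqueue_features plays this role).
    Threads give no real speed-up for this pure-Python loop because of the GIL.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        outcomes = list(pool.map(lambda i: shoot(x0, i), range(n_shots)))
    return sum(outcomes) / n_shots


for x0 in (-0.5, 0.0, 0.5):
    print(f"x0 = {x0:+.1f}  p_B ~ {committor(x0):.2f}")
```

Configurations near the barrier top give committor values close to 0.5, which is how committor analysis identifies transition-state-like configurations.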

The HTC library jobqueue_features proved to be resilient and to scale extremely well, meaning that it can handle a very high number of simultaneous tasks: a stress test showed that it can scale out to 1 million tasks on all available architectures. OPS was extended so that its integration with the jobqueue_features library became trivial. In its current state, OPS can almost seamlessly transition from use on a personal laptop to some of the largest HPC sites in Europe.

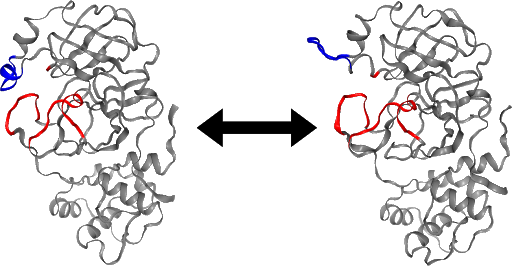

Integrating OPS and the HTC library resulted in an unprecedented parallelised committor simulation capability. These tools are currently being implemented for a committor simulation of the SARS-CoV-2 main protease. An initial analysis of the stable states, based on a long trajectory provided by D.E. Shaw Research [7] suggests that a loop region of the protein may act as a gate to the active site (Figure). This conformational change may regulate the accessibility of the active site of the main protease, and a better understanding of its mechanism could aid drug design.

The committor simulation can be used to explore the configuration space (taking more initial configurations), or to improve the accuracy of the calculated committor value (running more trajectories per configuration). Altogether, such data will provide insight into the dynamics of the protease loop region and the mechanism of its gate-like activity. In addition, the trajectories generated by the committor simulation can also be used as initial conditions for further studies using the transition path sampling approach.
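How many trajectories per configuration are needed follows from binomial statistics: each shot either reaches B first or not, so the standard error of a committor p estimated from N shots is sqrt(p(1 - p)/N). This is a minimal sketch; the target precisions are arbitrary example values.

```python
import math


def committor_std_error(p_est, n_shots):
    """Binomial standard error of a committor estimated from n_shots independent shots."""
    return math.sqrt(p_est * (1.0 - p_est) / n_shots)


def shots_needed(p_guess, target_se):
    """Smallest number of shots whose standard error is at most target_se for p = p_guess."""
    return math.ceil(p_guess * (1.0 - p_guess) / target_se ** 2)


# Configurations near the transition state (p ~ 0.5) are the most demanding.
for p in (0.1, 0.5):
    print(p, shots_needed(p, 0.05), shots_needed(p, 0.01))
```

Halving the error requires four times as many shots, which is why improving committor accuracy is as natural a fit for HTC as exploring more configurations.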

References

[1] Milosz Bialczak, Alan O'Cais, Mariusz Uchronski, and Adam Wlodarczyk. "Intelligent HTC for Committor Analysis." (2020). http://doi.org/10.5281/zenodo.4382017

[2] David W.H. Swenson, Jan-Hendrik Prinz, Frank Noé, John D. Chodera, and Peter G. Bolhuis. "OpenPathSampling: A flexible, open framework for path sampling simulations. 1. Basics." J. Chem. Theory Comput. 15, 813 (2019). https://doi.org/10.1021/acs.jctc.8b00626

[3] David W.H. Swenson, Jan-Hendrik Prinz, Frank Noé, John D. Chodera, and Peter G. Bolhuis. "OpenPathSampling: A flexible, open framework for path sampling simulations. 2. Building and Customizing Path Ensembles and Sample Schemes." J. Chem. Theory Comput. 15, 837 (2019). https://doi.org/10.1021/acs.jctc.8b00627

[4] Alan O'Cais, David Swenson, Mariusz Uchronski, and Adam Wlodarczyk. "Task Scheduling Library for Optimising Time-Scale Molecular Dynamics Simulations." August 2019.

[5] Dask Development Team. "Dask: Library for dynamic task scheduling." 2016.

[6] Matthew Rocklin. "Dask: Parallel computation with blocked algorithms and task scheduling." In Kathryn Huff and James Bergstra, editors, Proceedings of the 14th Python in Science Conference, pages 130–136, 2015.

[7] No specific author. Long trajectory provided by D.E. Shaw Research. https://www.deshawresearch.com/downloads/download_trajectory_sarscov2.cgi/, 2020. [Online; accessed 22-Oct-2020].

Proof of concept : recognition as a disruptive technology

Written by Ana Catarina Fernandes Mendonça

Abstract

A beautiful idea born via simulation, now being transformed into a commercial opportunity, has been recognised as a disruptive technology. At the heart of this ongoing story is advanced simulation using massively parallel computation, rare-event methods and genetic engineering.


Author: Donal MacKernan, University College Dublin, Ireland

Last week I received an email asking if I would be willing to accept the '2021 NovaUCD Licence of the Year Award' for the licence of the disruptive molecular switch platform technology to a US-based company, with an initial application as a point-of-care medical diagnostic for COVID-19 and influenza. Of course I said yes, and I have since received by courier a beautiful statue of a metal helix mounted on a black marble plinth (displayed on the right). It is nice that our work gets this sort of recognition, given all the effort it has taken to get to this point. In my last blog post I wrote about the first steps towards commercialization of our technology. Since then, everything has intensified. The company funding this research collaboration with University College Dublin now has over 20 people in the USA dedicated to its commercialization, including old hands hired from well-known immunodiagnostic and pharmaceutical companies, medical doctors, engineers and salespeople. On our side, the team has grown and now includes two software engineers/simulators, trained in part through E-CAM while they were studying theoretical physics, and four molecular biologists. In addition, contract research and manufacturing organizations are now being engaged so as to be ready for clinical testing and scale-up once we have fully optimized our diagnostic sensors for COVID-19. It is hard to believe it is only one year since we met the key commercial people. We continue to simulate various forms of the sensor to optimize its performance and commercialization, and for that, HPC resources from PRACE partners in Ireland (ICHEC), Switzerland (CSCS) and Italy (Cineca) have been of huge help.
We are also dedicating a lot of effort to software development to speed up our ability to estimate free energy properties such as binding affinities, which turn out to be much trickier than one might expect when the proteins are very large, for example in binding between antibodies and target antigens such as the COVID-19 spike protein. That methodology and software arose from an E-CAM pilot project and appear to have potential utility well beyond our initial expectations. The EU grant for the E-CAM Centre of Excellence will end soon (31st March). Hopefully, E-CAM will re-emerge soon.
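Binding affinities are usually reported as dissociation constants, which relate to the standard binding free energy by ΔG° = RT ln(K_d/c°), with c° = 1 mol/L. This is a minimal sketch of that conversion only; the 1 nM example is hypothetical and says nothing about the sensor or antibodies discussed here.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol K)


def binding_free_energy_kj(kd_molar, T=298.15, c_standard=1.0):
    """Standard binding free energy in kJ/mol from a dissociation constant K_d in mol/L.

    Uses dG = RT ln(K_d / c_standard); more negative means tighter binding.
    """
    return R * T * math.log(kd_molar / c_standard) / 1000.0


print(round(binding_free_energy_kj(1e-9), 1))  # hypothetical 1 nM binder: -51.4
```

Because of the logarithm, a tenfold change in K_d shifts ΔG° by only RT ln 10 (about 5.7 kJ/mol at room temperature), so free energy methods must be accurate to a few kJ/mol to discriminate between binders.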

Implementation of High-Dimensional Neural Network Potentials

Written by Louise Couton

Abstract

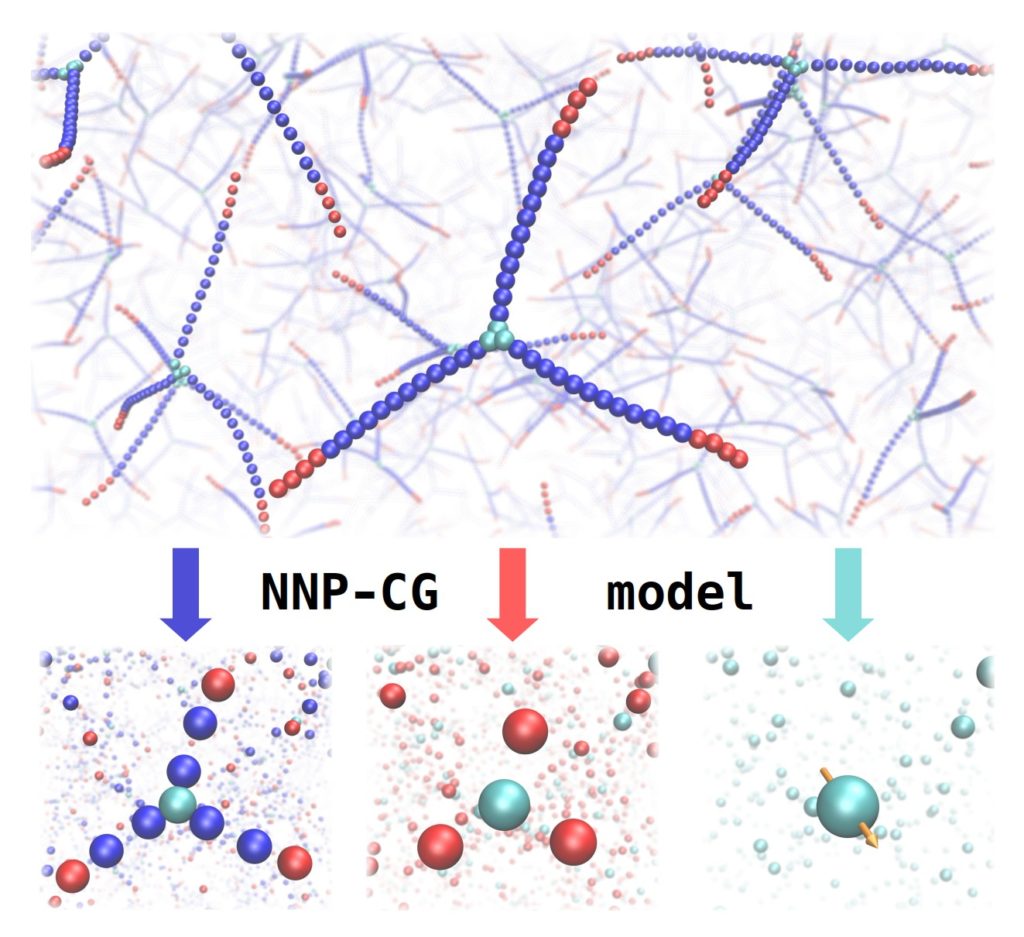

In this conversation with Andreas Singraber, a post-doc in E-CAM until last month, we will discover the body of work through which he expanded the Neural Network Potential (NNP) package n2p2 and improved user accessibility to the code via the LAMMPS package. Andreas talks about new tools he developed during his E-CAM pilot project, which can provide valuable input for future developments of NNP-based coarse-grained models. He also describes how E-CAM has impacted his career and led him to recently join a software company as a scientific software engineer.

With Dr. Andreas Singraber, Vienna Ab initio Simulation Package (VASP)

Another successful online training event!

Written by Ana Catarina Fernandes Mendonça

Our last Extended Software Development Workshop (ESDW) took place on 18th-22nd January [1], and considering its length (5 days) and its nature (theory and hands-on training sessions), it was a real success! "The workshop went very well, participants seem to have enjoyed it and they lasted until the end!", said organiser Jony Castagna, computational scientist and E-CAM programmer at UKRI STFC Daresbury Laboratory. The event, organised at the CECAM-UK-DARESBURY Node [2], focused on HPC for mesoscale simulation and aimed to introduce participants to Dissipative Particle Dynamics (DPD) and the mesoscale simulation package DL_MESO [3] (DL_MESO_DPD). DL_MESO is developed at UKRI STFC Daresbury by Michael Seaton, a computational chemist at Daresbury who was also an organiser of this event.

Another component of this workshop was parallel programming of hybrid CPU-GPU systems. In particular, DL_MESO has recently been ported to multi-GPU architectures [4] and runs efficiently on up to 4096 GPUs, an effort supported by E-CAM (thank you Jony!). Part of this workshop was dedicated to theory lectures and hands-on sessions on GPU architectures and OpenACC (NVIDIA DLI course) given by Jony, who is an NVIDIA DLI Certified Instructor. He said: "The intention is not only to port mesoscale solvers on GPUs, but also to expose the community to this new programming paradigm, which they can benefit from in their own fields of research".

All sessions in this ESDW were followed by discussions and hands-on exercises. Organisers were supported by another STFC colleague and former E-CAM post-doc Silvia Chiacchiera. One of the participants wrote “Thank you so much for your effort. This workshop will cause a significant shift in my thinking and approach”.

21 people registered for the event, but by the third day there were only 9, of whom 5 lasted until the last session! A picture taken at the last session speaks for itself 🙂

Do you want to join our next training event? Check out our programme:

- 4th & 11th February: High Throughput Computing with Dask: https://www.cecam.org/workshop-details/1022

- 11th-15th October: ESDW on High performance computing for simulation of complex phenomena: https://www.cecam.org/workshop-details/1069

- 11th-22nd October: ESDW on Improving bundle libraries: https://www.cecam.org/workshop-details/23

Full calendar at https://www.e-cam2020.eu/calendar/.

References

[1] https://www.cecam.org/workshop-details/8

[2] https://www.cecam.org/cecam-uk-daresbury

[3] M.A. Seaton et al. "DL_MESO: highly scalable mesoscale simulations", Molecular Simulation 39 (2013). http://www.cse.clrc.ac.uk/ccg/software/DL_MESO/

[4] J. Castagna, X. Guo, M. Seaton and A. O'Cais, "Towards extreme scale dissipative particle dynamics simulations using multiple GPGPUs", Computer Physics Communications (2020) 107159. DOI: 10.1016/j.cpc.2020.107159

Proof of concept : Metamorphosis

Written by Ana Catarina Fernandes Mendonça

Abstract

The transformation of a beautiful idea born via simulation into a commercial opportunity is described as it progresses from proof of concept towards a product. At the heart of this ongoing story is advanced simulation using massively parallel computation and rare-event methods.

LearnHPC: dynamic creation of HPC infrastructure for educational purposes

Written by Ana Catarina Fernandes Mendonça

Abstract

In a successful new PRACE-ICEI proposal, E-CAM, FocusCoE, HPC Carpentry and EESSI join forces to bring HPC resources to the classroom in a simple, secure and scalable way. Our plan is to reproduce the model developed by the Canadian open-source software project Magic Castle. The proposed solution creates virtual HPC infrastructure(s) in a public cloud, in this case on the Fenix Research Infrastructure, and generates temporary event-specific HPC clusters for training purposes, including a complete scientific software stack. The scientific software stack is fully optimised for the available hardware and will be provided by the European Environment for Scientific Software Installations (EESSI).

Description

EU-wide requirements for HPC training are exploding as the adoption of HPC in the wider scientific community gathers pace. However, the number of topics that can be thoroughly addressed without providing access to actual HPC resources is very limited, even at the introductory level. In cases where such access is available, security concerns and the overhead of the process of provisioning accounts make the scalability of this approach questionable.

EU-wide access to HPC resources on the scale required to meet the training needs of all countries is an objective that we attempt to address with this project. The proposed solution essentially provisions virtual HPC system(s) in a public cloud, in this case on the Fenix Research Infrastructure. The infrastructure will dynamically create temporary event-specific HPC clusters for training purposes, including a scientific software stack. The scientific software stack will be provided by the European Environment for Scientific Software Installations (EESSI) which uses a software distribution system developed at CERN, CernVM-FS, and makes a research-grade scalable software stack available for a wide set of HPC systems, as well as servers, desktops and laptops (including MacOS and Windows!).

The concept builds on Compute Canada's solution, Magic Castle, which aims to recreate the Compute Canada user experience in public clouds (there is even a presentation in which the main developer creates a cluster just by talking to his phone!). Magic Castle uses the open-source software Terraform and the HashiCorp Configuration Language (HCL) to define the virtual machines, volumes, and networks required to replicate a virtual HPC infrastructure.

In addition to providing a dynamically provisioned HPC resource, the project will also offer a scientific software stack provided by EESSI. This model is also based on a Compute Canada approach and enables replication of the EESSI software environment outside of any directly related physical infrastructure.

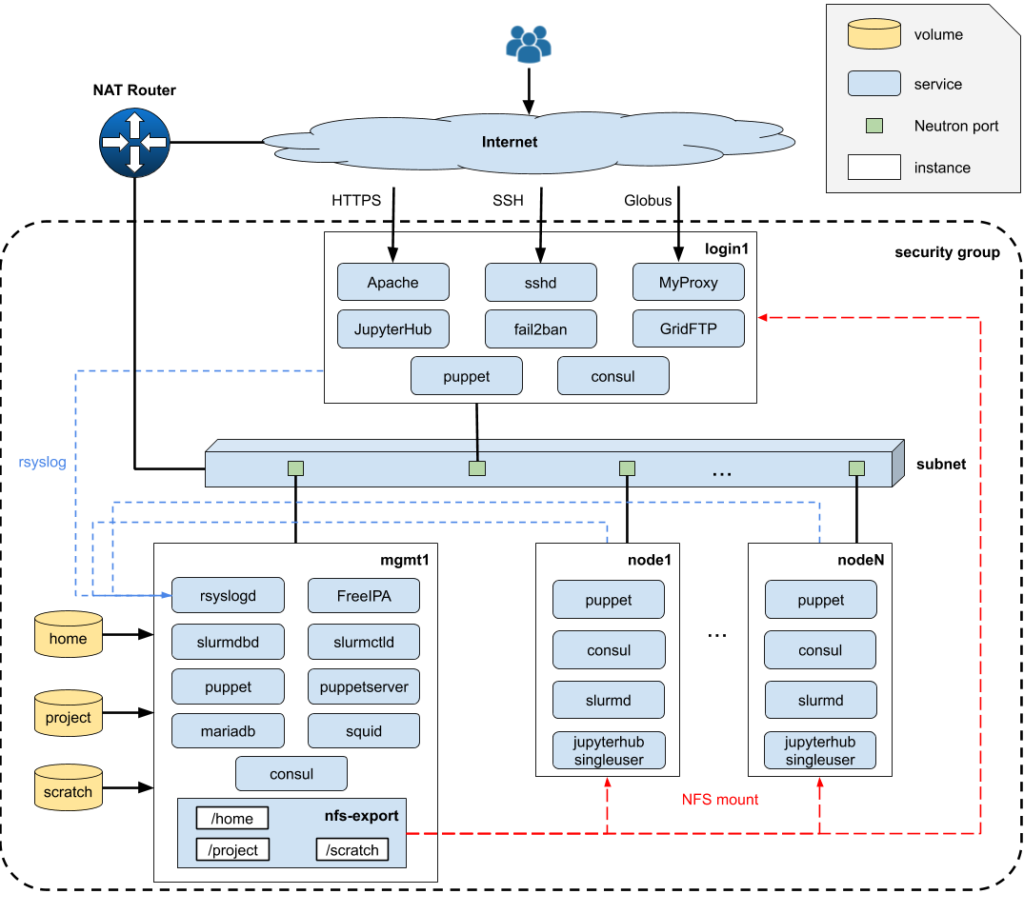

Our adaptation of Magic Castle aims to recreate the EESSI HPC user experience, for training purposes, on the Fenix Research Infrastructure. After deployment, the user is provided with a complete HPC cluster software environment, including a Slurm scheduler, a Globus Endpoint, JupyterHub, LDAP, DNS, and a wide selection of research software applications compiled by experts with EasyBuild.

The architecture of the solution is best represented by the accompanying graphic (taken from the Compute Canada documentation at https://github.com/ComputeCanada/magic_castle/tree/master/docs).

With the resources made available to the project, we plan to run 6 HPC training events from January to July 2021. These training events are connected to the Centres of Excellence E-CAM and FocusCoE and with HPC Carpentry.

Comics & Science – The E-CAM issue: an experiment in dissemination

Written by Ana Catarina Fernandes Mendonça

The E-CAM issue of Comics & Science has just been released on-line…and it’s just the beginning of the adventure!

Identifying exciting and original tools to engage the general public with advanced research is an intriguing and non-trivial challenge for the scientific community. E-CAM decided to try something unusual, and embarked on an interesting and slightly bizarre experience: collaborating with experts and artists to use comics to talk about HPC and simulation and modelling!

The adventure started when CECAM Deputy Director and E-CAM Work-Package leader Sara Bonella visited the CNR Institute for applied mathematics “Mauro Picone” (Cnr-Iac), in Rome, and became acquainted with the work of Comics&Science, a magazine published by CNR Edizioni to promote the relationship between science and entertainment. The magazine was created in 2013 by Roberto Natalini, Director of the Cnr-Iac, and Andrea Plazzi, author and editor with a scientific background and active in the field of comics.

Adopting the unique language of the comics, Comics&Science communicates science in a funny and understandable way via original stories that are always edited by some of the best authors and cartoonists in town. For the E-CAM issue, we had the good fortune to collaborate with Giovanni Eccher, comics writer and scriptwriter for movies and animations, and Sergio Ponchione, illustrator and cartoonist.

Giovanni and Sergio created for us the unique story of Ekham the wise, a magnificent witch who – with an accurate model and the help of a High Performance Cauldron (!) – enables Prince Variant to defeat the fearful Dragon that has kidnapped Princess Beauty. As usual, the King had promised the Princess's hand to the vanquisher of the dragon, but things don't turn out exactly as expected…

In addition to the comics, the E-CAM issue of Comics&Science presents several articles describing, in language aimed at young adults and the lay public in general, what simulations in advanced research are and the role of High Performance Computing. The issue also contains a statement from the European Commission on its vision for HPC. We are very grateful to our authors, who include Ignacio Pagonabarraga, Catarina Mendonça, Sara Bonella, Christoph Dellago, and Gerhard Sutmann, for playing with us.

The issue has been produced in partnership with CECAM, coordinator of E-CAM, and the longest standing institution promoting fundamental research on advanced computational methods.

The E-CAM issue of Comics&Science is freely available on our website at https://www.e-cam2020.eu/e-cam-issue-of-comics-science/. Should you wish to use this new toy to promote modelling and simulation, get in touch at info@e-cam2020.eu and let us know about your plans: we are happy to share the material provided that provenance is acknowledged.

The “first outing” of the E-CAM issue of Comics&Science took place on Friday 30 October at 14:15 CET with a presentation (in Italian) in the on-line programme of the 2020 Lucca Comics&Games Festival. A recording of that moment is available at https://www.youtube.com/watch?v=BUysRG0zlCk.

Enjoy the read and, most importantly, have fun 🙂

©2020 This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License (CC BY-NC-SA 4.0).