Abstract

The scalability of parallel applications depends on a number of characteristics, among them efficient communication, an equal distribution of work and an efficient data layout. Especially for methods based on domain decomposition, which is standard for, e.g., molecular dynamics, dissipative particle dynamics or particle-in-cell methods, unequal load is to be expected when particles are not distributed homogeneously, when interaction calculations differ in cost or when heterogeneous architectures are used, to name a few cases. In these scenarios the code has to decide how to redistribute the work among processes according to a work-sharing protocol, or how to dynamically adjust the computational domains, in order to balance the workload. A Load Balancing Library (ALL), developed within E-CAM at the Juelich Supercomputing Centre, aims to provide an easy and portable way to include dynamic domain-based load balancing in particle-based simulation codes. It provides several schemes to find a balanced split of the workload, from the simplest orthogonal non-staggered domain decomposition to the more elaborate Voronoi mesh scheme. In this text we provide an overview of ALL, its capabilities and current use cases, as well as where to find additional information on the library.

The latest release is 0.9.1 and can be found at: https://gitlab.version.fz-juelich.de/SLMS/loadbalancing/-/releases

Description

Most modern parallelized (classical) particle simulation programs are based on a spatial decomposition method as an underlying parallel algorithm: different processors administrate different spatial regions of the simulation domain and keep track of those particles that are located in their respective region. Processors exchange information

- in order to compute interactions between particles located on different processors

- to exchange particles that have moved to a region administered by a different processor.

This implies that the workload of a given processor is very much determined by its number of particles, or, more precisely, by the number of interactions that are to be evaluated within its spatial region.

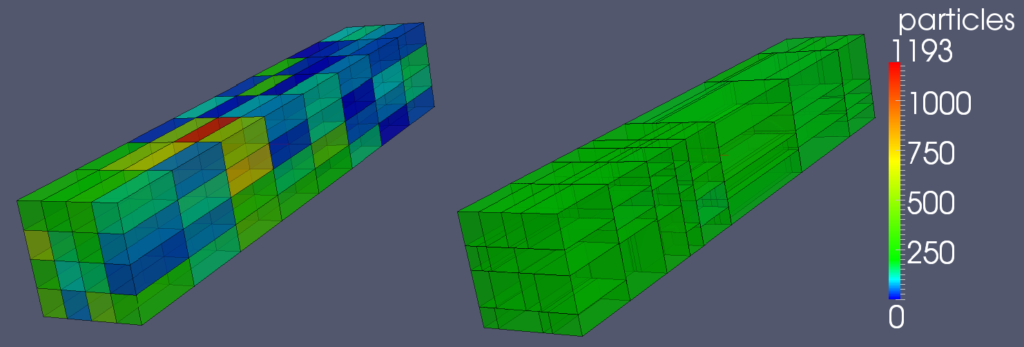

Certain systems of high physical and practical interest (e.g. condensing fluids) dynamically develop into a state where the distribution of particles becomes spatially inhomogeneous. Unless special care is taken, this results in a substantially inhomogeneous distribution of the processors’ workload. Since the work usually has to be synchronized between the processors, the runtime is determined by the slowest processor (i.e. the one with the highest workload). In the extreme case, this means that a large fraction of the processors are idle during these waiting times. This problem becomes particularly severe if one aims at strong scaling, where the number of processors is increased at constant problem size: every processor administrates a smaller and smaller region, and therefore inhomogeneities become more and more pronounced. This eventually limits the scalability of a given problem, even at processor counts that are still so small that communication overhead remains negligible.
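The cost of an inhomogeneous workload in a synchronized run can be quantified with a simple metric. The following is a minimal sketch and not part of ALL: the function name `load_imbalance` and the per-process work values are hypothetical, and the work is assumed to be given in arbitrary units (for example, the number of interactions evaluated per process).

```python
def load_imbalance(work):
    """Return (imbalance, efficiency) for a list of per-process work values.

    imbalance  = max(work) / mean(work)   (1.0 means perfectly balanced)
    efficiency = mean(work) / max(work)   (average busy fraction when every
                                           process waits for the slowest one)
    """
    if not work:
        raise ValueError("work must contain at least one value")
    mean = sum(work) / len(work)
    peak = max(work)
    if peak == 0:
        # No work anywhere: treat as trivially balanced.
        return 1.0, 1.0
    return peak / mean, mean / peak


# Hypothetical example: four processes, same total work in both cases.
homogeneous = [100, 100, 100, 100]
clustered = [250, 50, 50, 50]   # particles concentrated on one process

print(load_imbalance(homogeneous))  # (1.0, 1.0)
print(load_imbalance(clustered))    # (2.5, 0.4)
```

In the clustered case the step takes 2.5 times longer than in the balanced case, although the total work is identical, because three of the four processes wait for the busiest one.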

The solution to this problem is the inclusion of dynamic load balancing techniques. These methods redistribute the workload among the processors by lowering the load of the busiest cores and increasing the load of the most idle ones. Fortunately, several successful techniques for putting this strategy into practice are already known. Nevertheless, dynamic load balancing that is both efficient and widely applicable requires highly non-trivial coding work, and it has therefore not yet been implemented in a number of important codes.

A Load Balancing Library (ALL), developed within E-CAM at the Simulation Laboratory Molecular Systems of the Juelich Supercomputing Centre, aims to provide an easy and portable way to include dynamic domain-based load balancing in particle-based simulation codes. It was created in the context of an Extended Software Development Workshop (ESDW) within E-CAM (see ALL ESDW event details), where developers of CECAM community codes were invited, together with E-CAM postdocs, to work on the implementation of load balancing strategies. The goal of this activity is to increase the scalability of applications to a larger number of cores on HPC systems for spatially inhomogeneous systems, and thus to reduce the applications' time-to-solution.

ALL includes several load-balancing schemes, with additional approaches currently being added. The following list gives an overview of the currently included schemes:

- Tensor-Product Method: For the Tensor-Product method, the work on all processes (subdomains) is reduced over the Cartesian planes in the system. This work is then equalized by adjusting the borders of the Cartesian planes.

- Staggered Grid Method: For the staggered-grid scheme, a three-step hierarchical approach is applied: (1) the work is reduced over the Cartesian planes and the borders of these planes are adjusted; (2) within each Cartesian plane, the work is reduced for each Cartesian column, and the columns are adjusted relative to each other to homogenise the work in each column; (3) the work between neighbouring domains in each column is adjusted. Each adjustment is done locally with the neighbouring planes, columns or domains by adjusting the adjacent boundaries.

- Unstructured Mesh Method: In contrast to the Tensor-Product method and the Staggered Grid Method, the unstructured mesh method adjusts domains by moving not their boundaries but their vertices, i.e. the corner points of the domains. For each vertex, a force based on the differences in work of the neighboring domains is computed, and the vertex is shifted so as to equalize the work between these neighboring domains.

- Voronoi Mesh Method: Similar to the Unstructured Mesh Method (also referred to as the topological mesh method), the Voronoi mesh method computes a force based on work differences. In contrast to the topological mesh method, the force acts not on a vertex but on a Voronoi point, i.e. a point defining a Voronoi cell, which describes the domain. Consequently, the number of neighbors is not a conserved quantity, i.e. the topology may change over time.

- Histogram-based Staggered Grid Method: The histogram-based staggered-grid scheme results in the same grid as the staggered-grid scheme (see Staggered Grid Method), but it uses the cumulative work function in each of the three Cartesian directions to generate this grid. Using histograms and the previously defined distribution of process domains in a Cartesian grid, the scheme generates a staggered grid in three steps, in which the work is distributed as evenly as the resolution of the underlying histogram allows. In contrast to the other schemes, this scheme depends on a global exchange of work between processes.
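The idea of placing boundaries with a cumulative work function can be illustrated in one dimension. The sketch below is not ALL's implementation or API: the function `balanced_boundaries`, its parameters and the example histogram are hypothetical, the bins are assumed to have equal width, and the work inside each bin is assumed to be uniformly distributed so that a boundary can be interpolated within a bin.

```python
from itertools import accumulate


def balanced_boundaries(histogram, lower, upper, n_domains):
    """Place domain boundaries along one axis from a work histogram.

    histogram[i] is the work found in bin i; the bins have equal width and
    together cover [lower, upper]. Returns n_domains + 1 boundary positions
    such that each domain holds about total_work / n_domains.
    """
    total = sum(histogram)
    if total <= 0 or n_domains < 1:
        raise ValueError("need positive total work and at least one domain")

    width = (upper - lower) / len(histogram)
    # cumulative[i] is the work contained in all bins before bin i.
    cumulative = [0.0] + list(accumulate(histogram))

    boundaries = [lower]
    b = 0  # index of the bin currently being examined
    for k in range(1, n_domains):
        target = total * k / n_domains
        # Advance to the bin in which the cumulative work reaches the target.
        while cumulative[b + 1] < target:
            b += 1
        # Interpolate linearly inside that bin (uniform-work assumption).
        frac = (target - cumulative[b]) / histogram[b]
        boundaries.append(lower + (b + frac) * width)
    boundaries.append(upper)
    return boundaries


# Hypothetical work histogram: most of the work sits near the lower end.
print(balanced_boundaries([8, 4, 2, 2], 0.0, 4.0, 4))
# [0.0, 0.5, 1.0, 2.0, 4.0]
```

In this example each of the four domains receives 4 of the 16 units of work, so the domains are narrow where the work is dense and wide where it is sparse. The achievable balance is limited by the bin width, which mirrors the statement above that the work is distributed only as evenly as the resolution of the histogram allows.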

Use cases

ALL is being tested with the HemeLB code[1] from the Centre of Excellence CompBiomed. A recent paper describes how HemeLB’s developments in memory management and load balancing (with ALL) allow near-linear scaling performance of the code on hundreds of thousands of computer cores[2].

ALL is integrated into the multi-GPU version of the DL_MESO_DPD package (see the related news item). The aim of this integration is to improve performance when modelling complex systems with DL_MESO_DPD[3], such as large proteins or lipid bilayers, by redistributing the workload across the GPUs.