Organisers

- Rene Halver[1]

- Stephan Schulz[1][2]

- Godehard Sutmann[1][2]

[1] Jülich Supercomputing Centre, Forschungszentrum Jülich, Germany

[2] Interdisciplinary Centre for Advanced Materials Simulation (ICAMS), Ruhr-Universität Bochum, Germany

Description

Online event. Programme and registration available through the CECAM website for the event at https://www.cecam.org/workshop-details/1021

The scalability of parallel applications depends on a number of characteristics, among them efficient communication, an even distribution of work and an efficient data layout. Load imbalance is to be expected especially in methods based on domain decomposition, as is standard for, e.g., molecular dynamics, dissipative particle dynamics or particle-in-cell methods. Typical causes include particles that are not distributed homogeneously, interaction calculations that differ in cost, and heterogeneous architectures. In these scenarios the code has to balance the workload. It can do so either by redistributing work among processes according to a work-sharing protocol or by dynamically adjusting the computational domains.
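The minimal Python sketch below makes "dynamically adjusting the computational domains" more concrete. It is a generic illustration and not the algorithm used in ALL or any other library. It assumes a slab decomposition along a single axis and a known cost per particle; both are assumptions made for illustration. It places the slab boundaries at quantiles of the cumulative cost so that each process receives roughly the same amount of work. The function names `balanced_boundaries` and `domain_loads` are hypothetical.

```python
from bisect import bisect_right


def balanced_boundaries(positions, costs, n_domains, lo, hi):
    """Return n_domains + 1 slab boundaries on [lo, hi] with roughly equal cost per slab.

    Hypothetical, generic quantile-based placement for illustration; not ALL's algorithm.
    """
    pairs = sorted(zip(positions, costs))
    target = sum(costs) / n_domains  # ideal work per domain
    bounds = [lo]
    acc = 0.0
    k = 1  # index of the next interior boundary to place
    for i, (x, c) in enumerate(pairs):
        acc += c
        # Place interior boundary k once the running cost reaches k * target.
        while k < n_domains and acc >= k * target:
            nxt = pairs[i + 1][0] if i + 1 < len(pairs) else hi
            bounds.append(0.5 * (x + nxt))  # midway to the next particle
            k += 1
    # Pad if there were too few particles to place every interior boundary.
    while len(bounds) < n_domains:
        bounds.append(hi)
    bounds.append(hi)
    return bounds


def domain_loads(positions, costs, bounds):
    """Sum the cost of the particles falling into each slab [bounds[j], bounds[j+1])."""
    loads = [0.0] * (len(bounds) - 1)
    for x, c in zip(positions, costs):
        j = bisect_right(bounds, x) - 1
        j = min(max(j, 0), len(loads) - 1)  # clamp particles sitting exactly on lo or hi
        loads[j] += c
    return loads


# Six particles near x = 0 and two near x = 10, all with unit cost (made-up data).
positions = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 9.1, 9.2]
costs = [1.0] * len(positions)

uniform = [0.0, 5.0, 10.0]
balanced = balanced_boundaries(positions, costs, 2, 0.0, 10.0)
print(domain_loads(positions, costs, uniform))   # [6.0, 2.0]
print(domain_loads(positions, costs, balanced))  # [4.0, 4.0]
```

In this made-up example, the uniform split leaves one process with three times the work of the other, while the cost-based boundary (at x = 0.45) evens it out. A simple way to extend the idea to 3D is to repeat it along each axis. This yields a tensor-product-style decomposition in which each boundary plane is shared by every domain it cuts.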

The seminar will provide an overview of the motivation, the ideas behind various methods, and implementations based on tensor product decomposition, staggered grids, non-homogeneous mesh decomposition and a recently developed phase field approach. It will also present the implementation of several of these methods in the load balancing library ALL, developed within the E-CAM Centre of Excellence. Finally, a use case will be shown for the Materials Point Method (MPM), a hybrid Eulerian-Lagrangian method for materials simulations at the macroscopic level that solves continuum material equations.

The seminar is organised in three main parts:

- Overview of Load Balancing

- The ALL Load Balancing Library

- Balancing the Materials Point Method with ALL

The event will start at 14:00 CET on 11 December 2020 and is expected to last about two hours. It will run online via Zoom for registered participants and will be live-streamed on YouTube at https://youtu.be/-LCDEnYoFiQ.

Organisers

- Jony Castagna[1]

- Michael Seaton[1]

- Silvia Chiacchiera[1]

- Leon Petit[1]

[1] STFC Daresbury Laboratory, United Kingdom

Description

Online event. Programme and registration available through the CECAM website for the event at https://www.cecam.org/workshop-details/8

Mesoscale simulations have recently grown in importance because they can capture molecular and atomistic effects without simulating the prohibitively large number of particles required in Molecular Dynamics (MD) simulations. Different approaches, each based on coarse-graining groups of atoms and molecules, can reproduce the main chemical and physical properties as well as continuum behaviour such as the hydrodynamics of fluid flows.

One of the most common techniques is Dissipative Particle Dynamics (DPD), an approximate, coarse-grained, mesoscale simulation method. It is used to investigate phenomena between the atomistic and continuum scales, such as flows through complex geometries, microfluidics, phase behaviour and polymer processing. DPD is an off-lattice, discrete particle method similar to MD. Unlike MD, it uses three forces:

- a soft potential for the conservative force;
- a random force that mimics the Brownian motion of the particles;
- a drag force that balances the random force while conserving the total momentum of the system.
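The three pairwise forces listed above can be sketched in a few lines of Python. This minimal illustration assumes the widely used Groot-Warren form of DPD. In that form the weight functions are w_R(r) = 1 - r/r_c and w_D = w_R^2, and the noise amplitude satisfies sigma^2 = 2 gamma k_B T (the fluctuation-dissipation relation). It is not taken from DL_MESO. The parameter values (a = 25, gamma = 4.5, k_B T = 1, r_c = 1, dt = 0.01, in reduced units) are illustrative choices. The function name `dpd_pair_force` is hypothetical.

```python
import math
import random


def dpd_pair_force(ri, rj, vi, vj, a=25.0, gamma=4.5, kT=1.0, rc=1.0, dt=0.01, theta=None):
    """Total DPD force on particle i due to particle j (3D, reduced units).

    Hypothetical helper assuming the Groot-Warren form. The force on j is the exact
    negative of the returned vector, so applying both conserves momentum.
    """
    rij = [p - q for p, q in zip(ri, rj)]
    r = math.sqrt(sum(c * c for c in rij))
    if r == 0.0 or r >= rc:
        return [0.0, 0.0, 0.0]  # no interaction at or beyond the cutoff
    e = [c / r for c in rij]  # unit vector pointing from j to i
    vij = [p - q for p, q in zip(vi, vj)]
    e_dot_v = sum(p * q for p, q in zip(e, vij))

    w_r = 1.0 - r / rc                   # soft weight, also used for the conservative force
    w_d = w_r * w_r                      # fluctuation-dissipation: w_D = w_R**2
    sigma = math.sqrt(2.0 * gamma * kT)  # sigma**2 = 2 * gamma * kT
    if theta is None:
        theta = random.gauss(0.0, 1.0)   # draw once per pair and reuse it for j

    f_c = a * w_r                              # conservative soft repulsion
    f_d = -gamma * w_d * e_dot_v               # drag on the relative velocity
    f_r = sigma * w_r * theta / math.sqrt(dt)  # random (Brownian) kick
    magnitude = f_c + f_d + f_r
    return [magnitude * c for c in e]


# Two particles at distance 0.5 rc, at rest, with the noise switched off:
print(dpd_pair_force((0, 0, 0), (0.5, 0, 0), (0, 0, 0), (0, 0, 0), theta=0.0))
```

Drawing one random number per pair and adding +F to i and -F to j makes the random and drag forces cancel in the total momentum. This is the property the description above refers to.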

Real applications, however, usually involve a large number of particles. Even with coarse-graining relative to MD, High Performance Computing (HPC) is often required to simulate systems of industrial and scientific interest. At the same time, today’s hardware is moving quickly towards hybrid CPU-GPU architectures: five of the top ten supercomputers combine CPUs with NVIDIA GPU accelerators, which allows them to reach hundreds of petaflops. This type of architecture is also one of the main paths towards exascale.

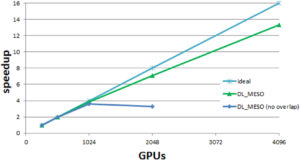

Only a few software packages, such as DL_MESO, userMESO and LAMMPS, can currently run large DPD simulations. In particular, DL_MESO has recently been ported to multi-GPU architectures and runs efficiently on up to 4096 GPUs. This makes it possible to investigate very large systems with billions of particles at an affordable computational cost. However, further work is needed before the current version can cover more complex physics, such as long-range forces, and achieve higher parallel efficiency.

The purpose of this Extended Software Development Workshop (ESDW) is to introduce students to the parallel programming of hybrid CPU-GPU systems. The intention is not only to port mesoscale solvers to GPUs, but also to expose the community to this new programming paradigm, from which participants can benefit in their own fields of research. More details about the topics covered by this ESDW are available under the “Program” tab.

References

- Seaton, M. A. et al., “DL_MESO: highly scalable mesoscale simulations”, Molecular Simulation 39 (2013).

- Castagna, J. et al., “Towards Extreme Scale using Multiple GPGPUs in Dissipative Particle Dynamics Simulations”, poster, The Royal Society meeting “Numerical algorithms for high-performance computational science” (2019).

- Castagna, J. et al., “Towards extreme scale dissipative particle dynamics simulations using multiple GPGPUs”, Computer Physics Communications (2020) 107159, https://doi.org/10.1016/j.cpc.2020.107159.

Organisers

- Alan O’Cais (Jülich Supercomputing Centre)

- David Swenson (École normale supérieure de Lyon)

Online event. More information and registration available through the CECAM website for the event at https://www.cecam.org/workshop-details/1022

Dask is a powerful Python library for task-based computing. It was originally developed to provide parallel and out-of-core versions of common data analysis routines from packages such as NumPy and pandas. However, the flexibility and usefulness of its underlying scheduler have led to extensions that let users write custom task-based algorithms and execute them on high-performance computing (HPC) resources.
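The task-graph idea behind Dask can be illustrated without Dask itself. The sketch below assumes the dictionary-based graph representation described in Dask's documentation. In that representation, each key maps either to a plain value or to a tuple `(function, *arguments)`, and string arguments that name other keys are dependencies. The executor functions (`get`, `levels`, `dependencies`) are hypothetical and serial, written for this example only; they are not Dask's scheduler. `levels` groups the tasks whose dependencies are already satisfied, i.e. the tasks a parallel scheduler could run at the same time.

```python
from operator import add, mul


def is_task(obj):
    """A task is a tuple whose first element is callable."""
    return isinstance(obj, tuple) and len(obj) > 0 and callable(obj[0])


def dependencies(graph, key):
    """Keys of the graph that the task stored under `key` refers to."""
    task = graph[key]
    if not is_task(task):
        return set()
    return {arg for arg in task[1:] if isinstance(arg, str) and arg in graph}


def get(graph, key, cache=None):
    """Evaluate `key` serially, computing each dependency only once (memoised)."""
    if cache is None:
        cache = {}
    if key not in cache:
        task = graph[key]
        if is_task(task):
            func, *args = task
            values = [get(graph, a, cache) if isinstance(a, str) and a in graph else a
                      for a in args]
            cache[key] = func(*values)
        else:
            cache[key] = task  # plain data, no computation needed
    return cache[key]


def levels(graph):
    """Group keys into waves; all keys in one wave could run concurrently."""
    done, remaining, waves = set(), set(graph), []
    while remaining:
        ready = {k for k in remaining if dependencies(graph, k) <= done}
        if not ready:
            raise ValueError("graph contains a cycle")
        waves.append(sorted(ready))
        done |= ready
        remaining -= ready
    return waves


graph = {
    "x": 1,
    "y": 2,
    "z": (add, "x", "y"),
    "w": (mul, "z", 10),
}
print(get(graph, "w"))  # 30
print(levels(graph))    # [['x', 'y'], ['z'], ['w']]
```

In Dask itself, such graphs are normally built through higher-level interfaces such as `dask.delayed` or `dask.array`. They are then executed by one of Dask's schedulers, which can run on HPC resources via tools such as Dask-Jobqueue.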

This workshop will be a series of virtual seminars/tutorials on tools in the Dask HPC ecosystem. The event will run online via Zoom for registered participants (“participate” tab) and it will be live streamed via YouTube at https://youtube.com/playlist?list=PLmhmpa4C4MzZ2_AUSg7Wod62uVwZdw4Rl.

Programme:

- 21 January 2021, 3pm CET (2pm UTC): Dask – a flexible library for parallel computing in Python. YouTube link: https://youtu.be/Tl8rO-baKuY

- 4 February 2021, 3pm CET (2pm UTC): Dask-Jobqueue – a library that integrates Dask with standard HPC queuing systems, such as SLURM or PBS. YouTube link: https://youtu.be/iNxhHXzmJ1w

- 11 February 2021, 3pm CET (2pm UTC): Jobqueue-Features – a library that enables functionality aimed at enhancing scalability. YouTube link: https://youtu.be/FpMua8iJeTk

Registration is not required to attend, but registered participants will receive email reminders before each seminar.

Organisers

- Andrea Cavalli (Italian Institute of Technology)

- Sergio Decherchi (Italian Institute of Technology)

- Marco Ferrarotti (Italian Institute of Technology)

- Walter Rocchia (Italian Institute of Technology)

Description

Methods for simulating complex phenomena are increasingly accepted as a means of pursuing scientific discovery. Simulation methods play a key role especially in the molecular sciences. They make it possible to perform virtual experiments and to estimate observables of interest for a wide range of complex phenomena, such as protein conformational changes, reactions and protein-ligand binding. Approaches to these goals include continuum modelling (e.g. the Poisson-Boltzmann equation (1)) and mesoscale methods (2). They also include more accurate fully atomistic simulations, either classical (3) or at the quantum level of theory (4). All these techniques share the requirement of solving complex equations that call for adequate computing resources.

Modern computing units are inherently parallel machines, in which multiple multi-core CPUs are often paired with one or more accelerators such as GPUs or, more recently, FPGAs. In this context, High Performance Computing (HPC) is the discipline that specifically addresses the optimization of software performance. It does so through code refactoring and through single- and multi-thread or multi-process optimization. Although several excellent codes already exist, there is still a compelling need to accelerate simulation codes properly, since several engines do not exploit the actual capabilities of current architectures.

The aim of this E-CAM Extended Software Development Workshop (ESDW) is to introduce participants to HPC through lectures on computer architectures and applications, and through hands-on sessions. In these sessions, participants will plan a suitable optimization strategy for one of the selected codes and start optimizing its performance.

This ESDW will thus focus on the technological aspects of HPC optimization and parallelization procedures. It will take place in Genoa, the city where the Italian Institute of Technology is located. The venue will be the Tower Genova Airport Hotel & Conference Center, and the workshop will last one week (5 days).

The primary goal of the workshop is to show participants the main challenges in code optimization and parallelization. It will also address the right balance between code readability, long-term maintenance and performance. This will include technical lessons in which parallelization paradigms are explained in detail. A second goal is to enable participants to find computational bottlenecks in software and to set up an optimization/parallelization strategy, including some initial optimization of the selected codes. This activity will be interleaved with talks by invited speakers presenting examples of HPC-oriented applications.

The lessons will cover:

- Modern computing machinery architectures

- Code refactoring and single-thread optimizations

- Shared memory architectures and parallelization

- Multi-process parallelization

- GPU-oriented parallelization

Applications

We will select two or three codes from those proposed by applicants during registration. Applicants may apply with or without proposing a code. Code selection will be based on code quality, broad interest to the E-CAM community, and the proposers' commitment to carry on a long-term optimization project beyond the E-CAM workshop timeframe (the IIT HPC group will support this activity).

A first call will be issued both for applicants bringing a code and for code-agnostic applicants, who will participate regardless of the codes selected. Once the two or three codes have been selected, a second call will be issued for applicants only. Applicants proposing a code are expected to know the software internals and its usage in detail, and to have prepared suitable testing/benchmarking input files. Codes can be in C/C++/Fortran, or even in Python for an initial port. Ideally, the code should be serial so that a full optimization strategy can be planned. All attendees are expected to bring their laptops to access the IIT cluster (64 nodes, equipped with GPUs) remotely during the hands-on sessions.

References

(1) Decherchi, S.; Colmenares, J.; Catalano, C. E.; Spagnuolo, M.; Alexov, E.; Rocchia, W. Between Algorithm and Model: Different Molecular Surface Definitions for the Poisson-Boltzmann Based Electrostatic Characterization of Biomolecules in Solution. Commun. Comput. Phys. 2013, 13 (1), 61–89. https://doi.org/10.4208/cicp.050711.111111s.

(2) Succi, S.; Amati, G.; Bonaccorso, F.; Lauricella, M.; Bernaschi, M.; Montessori, A.; Tiribocchi, A. Towards Exascale Design of Soft Mesoscale Materials. Journal of Computational Science 2020, 101175. https://doi.org/10.1016/j.jocs.2020.101175.

(3) Dror, R. O.; Dirks, R. M.; Grossman, J. P.; Xu, H.; Shaw, D. E. Biomolecular Simulation: A Computational Microscope for Molecular Biology. Annu. Rev. Biophys. 2012, 41 (1), 429–452. https://doi.org/10.1146/annurev-biophys-042910-155245.

(4) Car, R.; Parrinello, M. Unified Approach for Molecular Dynamics and Density-Functional Theory. Phys. Rev. Lett. 1985, 55 (22), 2471–2474. https://doi.org/10.1103/PhysRevLett.55.2471.

Organisers

- Nick R. Papior (Technical University of Denmark, Denmark)

- Micael Oliveira (Max Planck Institute for the Structure and Dynamics of Matter, Hamburg, Germany)

- Yann Pouillon (Universidad de Cantabria, Spain)

- Volker Blum (Duke University, Durham, NC, USA)

- Fabiano Corsetti (Synopsys QuantumWise, Denmark)

- Emilio Artacho (University of the Basque Country, United Kingdom)

Description

Programme and registration available through the CECAM website for the event at https://www.cecam.org/workshop-details/23

The landscape of electronic-structure calculations is evolving rapidly. On the one hand, the adoption of common libraries greatly accelerates the availability of new theoretical developments and can have a significant impact on multiple scientific communities at once [LibXC, PETSc]. On the other hand, electronic-structure codes are increasingly used as “force drivers” within broader calculations [Flos, IPi], a use case for which they were not originally designed. Recent modelling approaches that address system-size limitations, while preserving consistency with what is currently available, have also become relevant players in the field. For instance, Second-Principles Density Functional Theory [SPDFT] is a systematic approximation built on top of first-principles DFT. It provides a similar level of accuracy and makes it possible to run calculations on more than 100,000 atoms [ScaleUp, Multibinit]. At a broader level, the European Materials Modelling Council (EMMC) has organised various events with three aims: establishing guidelines and roadmaps for collaboration between academia and industry to meet prominent challenges in modelling realistic systems; ensuring the economic sustainability of such endeavours; and proposing new career paths for people with hybrid scientist/software-engineer profiles [EMMC1, EMMC2].

All these trends push the development of electronic-structure software further towards the provision of standards, libraries, APIs and flexible software components. At a social level, they are also bringing different communities together and reinforcing existing collaborations within the communities themselves. Ongoing efforts include increasing coordination of developments, enhanced integration of libraries into the main codes, and consistent distribution of the software modules. These efforts have been made possible in part by adapting Lean, Agile and DevOps approaches to scientific software development and by building highly automated infrastructures [EtsfCI, OctopusCI, SiestaPro]. A key enabler throughout this process has been the will to abandon the former silo mentality. This applies both at the scientific level (one research group, one code) and at the business-model level (libre software vs. open source vs. proprietary), and it has allowed collaborations between communities and made new public-private partnerships possible.

In this context, an essential component of the Electronic Structure Library [esl, esl-gitlab] is the ESL Bundle, a consistent set of libraries broadly used within the electronic-structure community that can be installed together. The bundle solves various installation issues for end users and enables a smoother integration of the shipped libraries into external codes. To keep the bundle compatible with the main electronic-structure codes in the long run, its development has been accompanied by the creation of the ESL Steering Committee. The committee includes representatives of both the individual ESL components and the codes using them. As a consequence, the visibility of the ESL is growing, and its developers are exposed to an increasing amount of feedback as well as requests from third-party applications. Many of these developers contribute to more than one software package. For them, this is an additional source of pressure, on top of research publications and fundraising duties, that is not trivial to manage.

Establishing an infrastructure that allows code developers to act efficiently on the feedback they receive has become a necessary step. This infrastructure must also guarantee the long-term usability of the ESL components, both individually and as a bundle. It requires efficient coordination of several elements:

- Setting up a common and consistent code-development infrastructure and training for compilation, installation, testing and documentation that can be used seamlessly beyond the electronic-structure community, and learning from solutions adopted by other communities.

- Agreeing on metadata and metrics that are relevant for users of ESL components as well as for third-party software not necessarily related directly to electronic structure.

- Creating long-lasting synergies between stakeholders of all the communities involved and making it attractive for industry to contribute.

Since 2014, the ESL has been paving the way towards ever broader collaborations through a wiki, a data-exchange standard, the refactoring of code of global interest into integrated modules, and regular workshops. It does so as part of a wider movement led by the European eXtreme Data and Computing Initiative [exdci].

References

[EMMC1] https://emmc.info/events/emmc-csa-expert-meeting-on-coupling-and-linking/

[EMMC2] https://emmc.info/events/emmc-csa-expert-meeting-sustainable-economic-framework-for-materials-modelling-software/

[EtsfCI] https://www.etsf.eu/resources/infrastructure

[Flos] https://github.com/siesta-project/flos

[IPi] https://github.com/i-pi/i-pi

[LibXC] https://tddft.org/programs/libxc/

[Multibinit] https://ui.adsabs.harvard.edu/abs/2019APS..MARX19008R/abstract

[OctopusCI] https://octopus-code.org/buildbot/#/

[PETSc] https://www.mcs.anl.gov/petsc/

[ScaleUp] https://doi.org/10.1103/PhysRevB.95.094115

[SiestaPro] https://www.simuneatomistics.com/siesta-pro/

[SPDFT] https://doi.org/10.1088/0953-8984/25/30/305401

[esl] http://esl.cecam.org/

[esl-gitlab] http://gitlab.e-cam2020.eu/esl

[exdci] https://exdci.eu/newsroom/press-releases/exdci-towards-common-hpc-strategy-europe